Disclaimer

This template is only available on self-hosted n8n because it uses the MCP Client community node.

Who is this for?

The Scrape Web Data with Bright Data and MCP Automated AI Agent workflow is built for professionals who need to automate large-scale data extraction using the Bright Data MCP Server and Google Gemini.

This solution is ideal for:

- Data Analysts - Who require structured, enriched datasets for analysis and reporting.

- Marketing Researchers - Seeking fresh market intelligence from dynamic web sources.

- Product Managers - Who want competitive product and feature insights from various websites.

- AI Developers - Aiming to feed web data into downstream machine learning models.

- Growth Hackers - Looking for high-quality data to fuel campaigns, research, or strategic targeting.

What problem is this workflow solving?

Manually scraping websites, cleaning raw HTML, and generating useful insights from it is slow, error-prone, and hard to scale.

This workflow solves these problems by:

- Automating complex web data extraction through Bright Data’s MCP Server.

- Reducing the human effort needed for cleaning, parsing, and analyzing unstructured web content.

- Allowing seamless integration into further automation processes.

What does this workflow do?

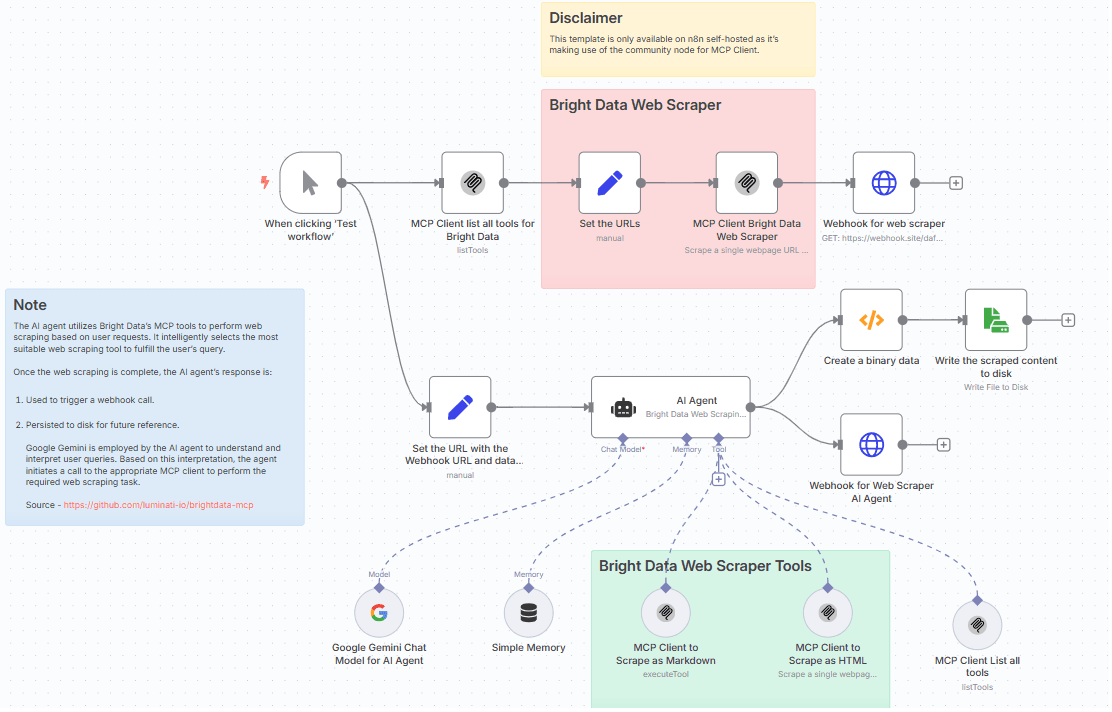

This n8n workflow performs the following steps:

- Trigger: Start manually.

- Input URL(s): Specify the URL to scrape.

- Web Scraping (Bright Data): Use Bright Data’s MCP Server tools to scrape the page in Markdown and HTML formats.

- Store / Output: Save the results to disk and send a Webhook notification.
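To make the Store / Output step concrete, here is a minimal Python sketch of the same idea outside n8n: write the scraped content to disk, then prepare a JSON notification for a webhook. It is an illustration under stated assumptions, not the workflow's actual node configuration; the function names, the file name `scrape.md`, the payload fields, and the `https://example.com/webhook` endpoint are all hypothetical.

```python
import json
import tempfile
import urllib.request
from pathlib import Path


def save_result(directory: Path, name: str, content: str) -> Path:
    """Write scraped content to disk and return the resulting file path."""
    directory.mkdir(parents=True, exist_ok=True)
    path = directory / name
    path.write_text(content, encoding="utf-8")
    return path


def build_webhook_request(webhook_url: str, payload: dict) -> urllib.request.Request:
    """Prepare (but do not send) a JSON POST request for the webhook."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    out_dir = Path(tempfile.mkdtemp())
    saved = save_result(out_dir, "scrape.md", "# Example page\n")
    request = build_webhook_request(
        "https://example.com/webhook",  # hypothetical endpoint
        {"file": str(saved), "format": "markdown"},  # hypothetical payload fields
    )
    # Passing `request` to urllib.request.urlopen would deliver the notification.
    print(saved.name, request.get_method())
```

Building the request separately from sending it keeps the sketch testable without network access.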

Setup

- Please make sure to setup n8n locally with MCP Servers by navigating to n8n-nodes-mcp

- Please make sure to install the Bright Data MCP Server @brightdata/mcp on your local machine.

- Sign up at Bright Data.

- Create a Web Unlocker proxy zone called mcp_unlocker on Bright Data control panel.

- Navigate to Proxies & Scraping and create a new Web Unlocker zone by selecting Web Unlocker API under Scraping Solutions.

- In n8n, configure the Google Gemini(PaLM) Api account with the Google Gemini API key (or access through Vertex AI or proxy).

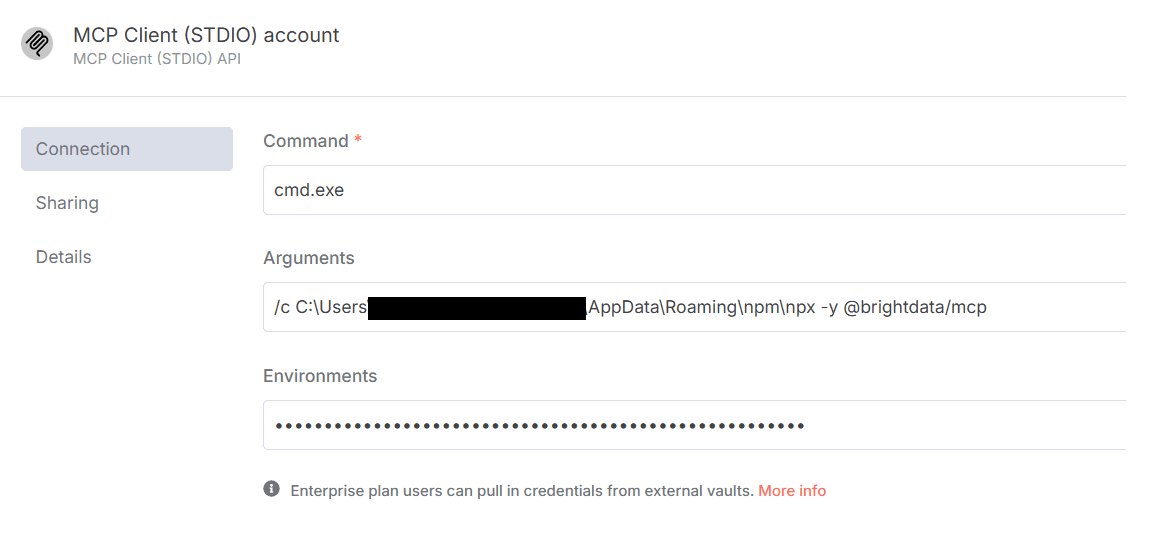

- In n8n, configure the credentials to connect with MCP Client (STDIO) account with the Bright Data MCP Server as shown below.

Make sure to copy the Bright Data API_TOKEN within the Environments textbox above as API_TOKEN=<your-token>.

8. Update the LinkedIn URL person and company workflow.

9. Update the Webhook HTTP Request node with the Webhook endpoint of your choice.

10. Update the file name and path to persist on disk.
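As a rough illustration of the MCP Client (STDIO) credential values from the setup steps, the Python sketch below assembles an Environments string. Several details are assumptions rather than facts from this template: that the server is launched with `npx @brightdata/mcp`, that the zone is passed through a `WEB_UNLOCKER_ZONE` variable, and that the Environments field accepts comma-separated NAME=VALUE pairs. Check the n8n-nodes-mcp and Bright Data MCP documentation before relying on any of them. The helper name `build_environments` is hypothetical.

```python
def build_environments(api_token: str, zone: str = "mcp_unlocker") -> str:
    """Build a NAME=VALUE list for the Environments textbox.

    Assumption: comma-separated pairs are accepted, and the zone variable
    is named WEB_UNLOCKER_ZONE.
    """
    token = api_token.strip()
    if not token:
        raise ValueError("Bright Data API token must not be empty")
    pairs = {"API_TOKEN": token, "WEB_UNLOCKER_ZONE": zone}
    return ",".join(f"{name}={value}" for name, value in pairs.items())


# Hypothetical credential values; the field names mirror the setup text only loosely.
credential = {
    "command": "npx",                # assumed launcher
    "arguments": "@brightdata/mcp",  # package named in the setup steps
    "environments": build_environments("<your-token>"),
}

if __name__ == "__main__":
    print(credential["environments"])
```

Rejecting an empty token early avoids a harder-to-diagnose authentication failure once the MCP server starts.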

How to customize this workflow to your needs

- Different Inputs: Instead of static URLs, accept URLs dynamically via webhook or form submissions.

- Outputs: Update the Webhook endpoints to send the response to Slack channels, Airtable, Notion, CRM systems, etc.
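If URLs arrive dynamically from a webhook or form, it helps to validate them before handing them to the scraper. The following Python sketch shows one way to do that. The payload shape (a `urls` key holding a string or a list of strings) and the function name `extract_urls` are assumptions made for illustration, not part of the template.

```python
from urllib.parse import urlparse


def extract_urls(payload: dict) -> list[str]:
    """Return unique, well-formed http(s) URLs from a webhook or form payload."""
    raw = payload.get("urls", [])  # "urls" is a hypothetical field name
    if isinstance(raw, str):
        raw = [raw]
    seen: set[str] = set()
    urls: list[str] = []
    for item in raw:
        if not isinstance(item, str):
            continue  # ignore non-string entries
        candidate = item.strip()
        parsed = urlparse(candidate)
        # Keep only absolute http(s) URLs with a host, dropping duplicates.
        if parsed.scheme in ("http", "https") and parsed.netloc and candidate not in seen:
            seen.add(candidate)
            urls.append(candidate)
    return urls


if __name__ == "__main__":
    sample = {"urls": ["https://example.com/a", "not a url", "https://example.com/a"]}
    print(extract_urls(sample))
```

Filtering at the entry point keeps malformed or duplicate inputs from consuming scraping requests later in the workflow.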