Build an AI Agent which accesses two MCP Servers: a RAG MCP Server and a Search Engine API MCP Server.

This workflow contains community nodes that are only compatible with the self-hosted version of n8n.

Tutorial

Click here to watch the full tutorial on YouTube!

How it works

We build an AI Agent which has access to two MCP servers:

- An MCP Server connected to a RAG database (click here for the RAG MCP Server)

- An MCP Server that can query a search engine, so the AI Agent also has access to information about recent events

Installation

- To use the MCP Client, you also need to set up the MCP Server template.

- Open the "MCP Client: RAG" node and update the SSE Endpoint so that it points to your MCP Server workflow.

-

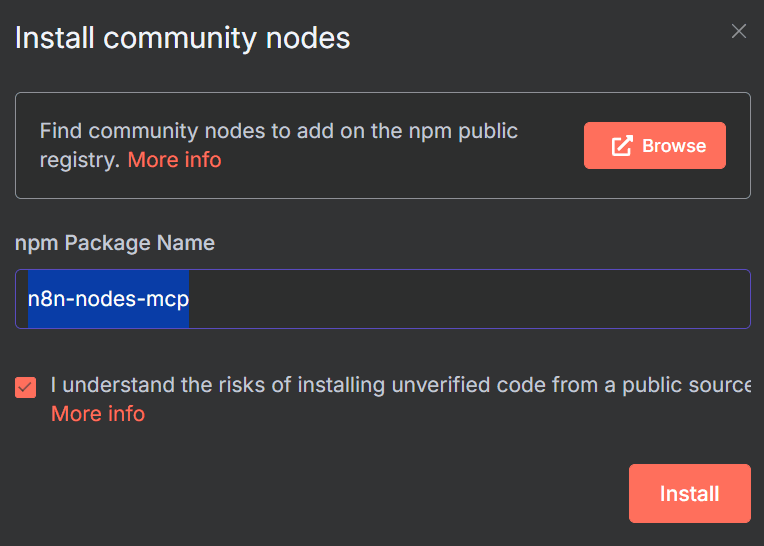

Install the "n8n-nodes-mcp" community node via settings > community nodes

-

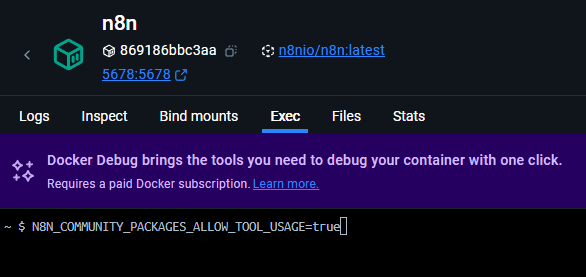

ONLY FOR SELF-HOSTING: In Docker, click on your n8n container. Navigate to "Exec" and execute the below command to allow community nodes:

N8N_COMMUNITY_PACKAGES_ALLOW_TOOL_USAGE=true

- Navigate to Bright Data and create a new "Web Unlocker API" named "mcp_unlocker".

-

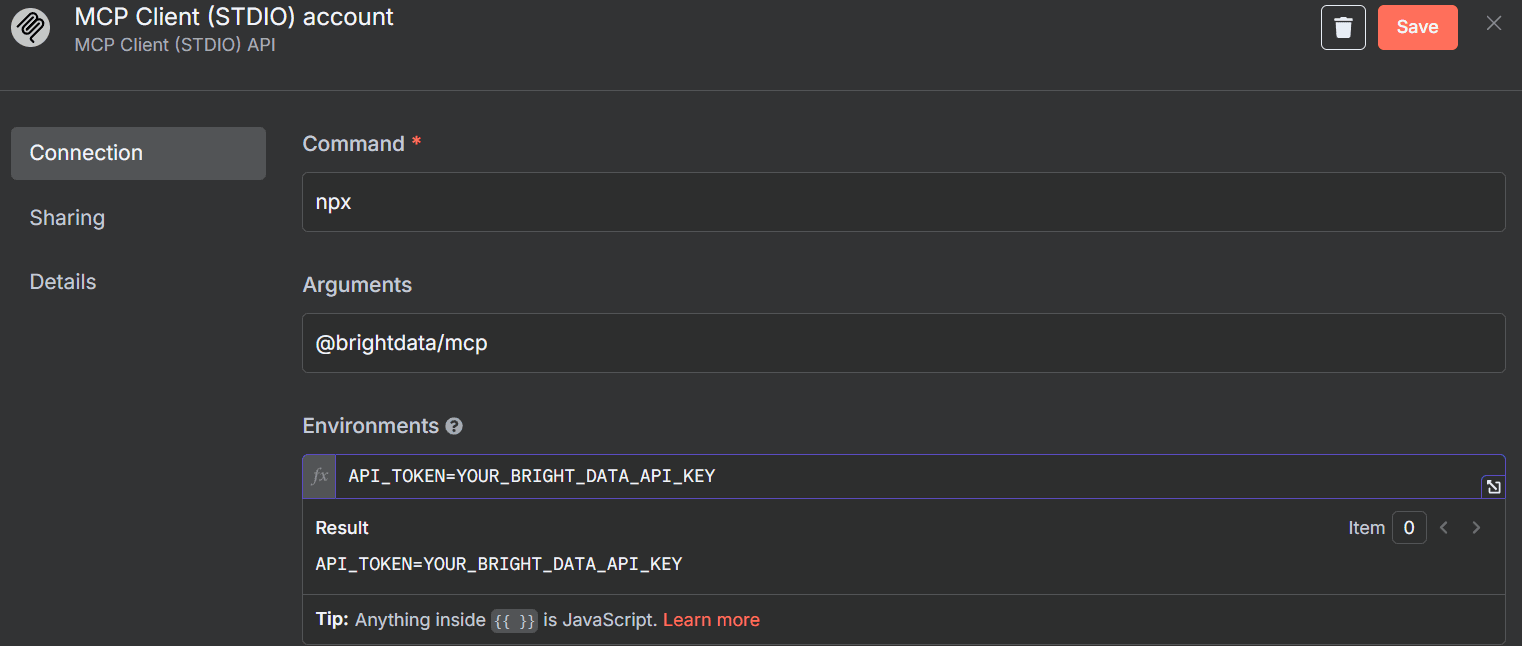

Open the "MCP Client" and add the following credentials:

How to use it

- Run the Chat node and start asking questions

More detailed instructions

Missed a step? Find more detailed instructions here: Personal Newsfeed With Bright Data and n8n

What is Retrieval Augmented Generation (RAG)?

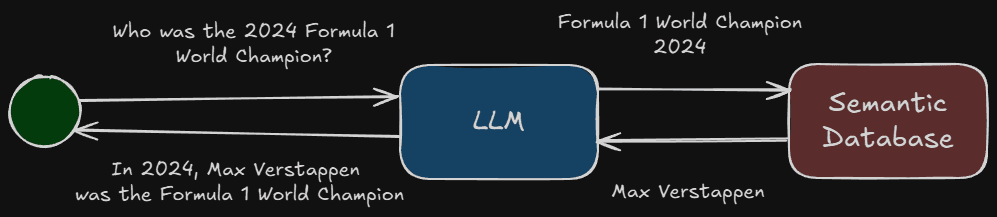

Large Language Models (LLMs) are trained on data up to a specific cutoff date. Imagine a model that was trained in December 2023 on data up to September 2023. Such a model has no knowledge of anything that happened after September 2023, including events in 2024. So if you ask it who won the 2024 Formula 1 World Championship, it cannot know the answer.

The solution? Retrieval Augmented Generation. With RAG, the user's question is first sent to a semantic (vector) database, which returns the stored documents that are most relevant to the question. The LLM then uses this retrieved information to answer the user's question.
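The retrieve-then-augment loop described above can be sketched in a few lines. This is a minimal illustration, not how the RAG MCP Server is implemented: it assumes a tiny in-memory document list (contents made up) and uses a bag-of-words vector with cosine similarity as a stand-in for a real embedding model and vector database. The function names `embed`, `retrieve` and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical document store; in this workflow the chunks would live in the
# RAG MCP Server's database instead.
DOCUMENTS = [
    "The RAG MCP Server answers questions from documents stored in a vector database.",
    "Bright Data's Web Unlocker API lets the agent fetch search engine results.",
    "n8n community nodes must be installed via Settings > Community Nodes.",
]


def embed(text: str) -> Counter:
    """Stand-in embedding: a bag-of-words count vector.
    A real RAG setup would call an embedding model here."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 1) -> list[str]:
    """Return the k stored documents most similar to the question."""
    q = embed(question)
    ranked = sorted(DOCUMENTS, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, k: int = 1) -> str:
    """Augment the user's question with the retrieved context for the LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("How do I install community nodes in n8n?"))
```

Replacing `embed` with a real embedding model and `DOCUMENTS` with a vector store keeps the same structure: retrieve the closest chunks, then pass them to the LLM together with the question.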

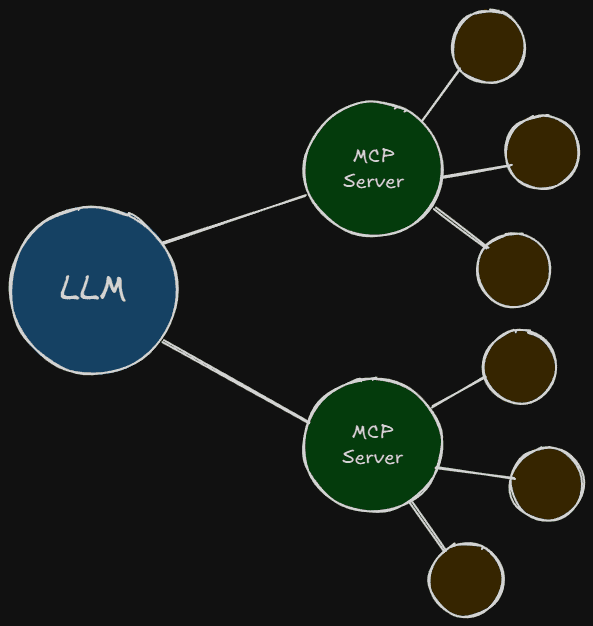

What is Model Context Protocol (MCP)?

MCP is a communication protocol that AI agents use to call tools hosted on external servers.

When an MCP client connects to an MCP server, the server provides an overview of all its tools, prompts and resources. Based on the user's request, the AI agent then chooses which tools to call, and the MCP server executes them and returns the results.
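To make the discover-then-call exchange concrete, here is a toy sketch of the two messages involved. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are the MCP methods for listing and invoking tools (prompts and resources have their own list methods). Everything else is an assumption for illustration: the server is a plain in-process function rather than a real transport such as SSE, the initialize handshake is omitted, and the `search_engine` tool and its stub handler are hypothetical.

```python
import json

# Hypothetical tool catalogue of a toy in-process "server". The "handler" key
# is internal to this sketch and is not sent to the client.
TOOLS = {
    "search_engine": {
        "description": "Search the web for recent information (hypothetical).",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
        "handler": lambda args: f"Top result for '{args['query']}' (stub)",
    }
}


def handle(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request roughly the way an MCP server does for tools."""
    method = request["method"]
    if method == "tools/list":
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        result = {"tools": tools}
    elif method == "tools/call":
        params = request["params"]
        text = TOOLS[params["name"]]["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # -32601 is the standard JSON-RPC "Method not found" error code.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# Client side: 1) discover the server's tools, 2) call the one the agent picked.
listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
chosen = listing["result"]["tools"][0]["name"]  # in practice, the LLM makes this choice
reply = handle({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": chosen, "arguments": {"query": "F1 champion 2024"}},
})
print(json.dumps(reply, indent=2))
```

Note the division of labour: the server only advertises and executes tools, while the choice of which tool to call (here hard-coded to the first one) belongs to the agent.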

An AI agent can connect to multiple MCP servers at once (in this workflow, through one MCP Client node per server), and each server can host multiple tools.
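One way to picture an agent working with several servers is a merged tool catalogue: the tools of every server are collected into one list the agent can choose from, and each call is routed back to the server that hosts the tool. The sketch below only illustrates that idea and is not how n8n's MCP Client nodes work internally. The server names, tool names and lambdas are hypothetical stand-ins for the RAG and search engine servers in this workflow, and rejecting duplicate tool names is a design choice of this sketch.

```python
from typing import Callable

ToolFn = Callable[[dict], str]

# Two hypothetical servers mirroring this workflow: one backed by the RAG
# database, one backed by a search engine. Tool names are made up.
SERVERS: dict[str, dict[str, ToolFn]] = {
    "rag": {
        "query_knowledge_base": lambda a: f"RAG answer for: {a['question']}",
    },
    "search": {
        "search_engine": lambda a: f"Search results for: {a['query']}",
        "scrape_page": lambda a: f"Page content of: {a['url']}",
    },
}


def build_registry(servers: dict[str, dict[str, ToolFn]]) -> dict[str, str]:
    """Merge every server's tools into one tool-name -> server-name map."""
    registry: dict[str, str] = {}
    for server, tools in servers.items():
        for name in tools:
            if name in registry:
                # Ambiguous routing: refuse rather than silently shadow a tool.
                raise ValueError(f"tool {name!r} offered by {registry[name]!r} and {server!r}")
            registry[name] = server
    return registry


def call_tool(registry: dict[str, str], name: str, arguments: dict) -> str:
    """Route a tool call chosen by the agent to the server that hosts it."""
    server = registry[name]
    return SERVERS[server][name](arguments)


registry = build_registry(SERVERS)
print(sorted(registry))  # ['query_knowledge_base', 'scrape_page', 'search_engine']
print(call_tool(registry, "search_engine", {"query": "F1 champion 2024"}))
# Search results for: F1 champion 2024
```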