
This n8n template demonstrates how to obtain token usage from AI Agents and place the data into a spreadsheet that calculates the estimated cost of the execution.

Obtaining the token usage from AI Agents is tricky, because the agent's output doesn't include all the data from tool calls. This workflow taps into the workflow execution metadata to extract token usage information.
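As a rough illustration of the extraction step, the sketch below walks a nested execution payload and adds up every token usage record it finds. The field names `tokenUsage`, `promptTokens` and `completionTokens`, and the layout of `sample_execution`, are assumptions made for this example; check them against the execution data your own instance and LLM provider return.

```python
from typing import Any

def sum_token_usage(node: Any) -> dict:
    """Recursively sum every `tokenUsage` object found in nested data.

    Assumption: usage records are dicts stored under the key `tokenUsage`
    with integer `promptTokens` and `completionTokens` fields.
    """
    totals = {"promptTokens": 0, "completionTokens": 0}
    if isinstance(node, dict):
        usage = node.get("tokenUsage")
        if isinstance(usage, dict):
            for key in totals:
                totals[key] += int(usage.get(key, 0))
        children = [v for k, v in node.items() if k != "tokenUsage"]
    elif isinstance(node, list):
        children = node
    else:
        children = []
    for child in children:
        child_totals = sum_token_usage(child)
        for key in totals:
            totals[key] += child_totals[key]
    return totals

# Hypothetical payload: two model calls plus one tool call without usage data.
sample_execution = {
    "data": {"resultData": {"runData": {
        "Chat Model": [
            {"data": {"ai_languageModel": [[{"json": {
                "tokenUsage": {"promptTokens": 120, "completionTokens": 30}}}]]}},
            {"data": {"ai_languageModel": [[{"json": {
                "tokenUsage": {"promptTokens": 80, "completionTokens": 20}}}]]}},
        ],
        "Calculator": [{"data": {"ai_tool": [[{"json": {"result": 4}}]]}}],
    }}}
}

totals = sum_token_usage(sample_execution)
print(totals)
```

Walking the whole payload instead of reading one fixed path means model calls triggered by tool use are counted too, as long as they report usage under the assumed key.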

Works well with OpenAI, Google and Anthropic models. Other LLM providers might need small tweaks.

How it works

- The AI Agent executes and then calls a subworkflow to calculate the token usage.

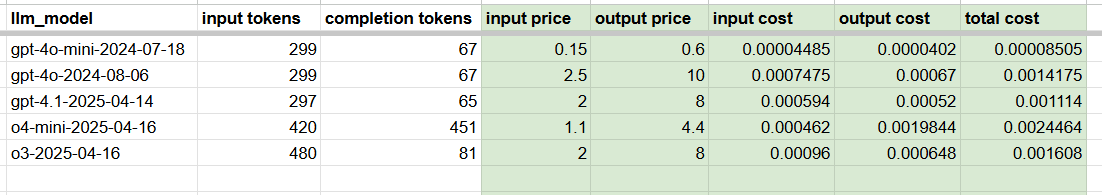

- The data is stored in Google Sheets.

- The spreadsheet has formulas to calculate the estimated cost of the execution.
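The template's actual spreadsheet formulas are not shown here, but the arithmetic behind an estimated cost is typically tokens multiplied by a per-token price. The sketch below assumes prices are quoted per one million tokens; the model name `example-model` and both price values are placeholders, not real provider rates.

```python
# Placeholder prices in USD per 1,000,000 tokens (hypothetical, not real rates).
PRICES_PER_MILLION = {
    "example-model": {"input": 2.50, "output": 10.00},
}

def estimate_cost(model: str, prompt_tokens: int, completion_tokens: int,
                  prices: dict = PRICES_PER_MILLION) -> float:
    """Return the estimated cost in USD for one execution."""
    rate = prices[model]  # raises KeyError for models without a price entry
    total = prompt_tokens * rate["input"] + completion_tokens * rate["output"]
    return total / 1_000_000

cost = estimate_cost("example-model", prompt_tokens=200, completion_tokens=50)
print(f"Estimated cost: ${cost:.6f}")
```

Keeping input and output prices separate matters because providers usually charge different rates for prompt and completion tokens.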

How to use

- The AI Agent is used as an example. Feel free to replace this with other agents you have.

- Call the subworkflow AFTER all the other branches have finished executing.

Requirements

- LLM account (OpenAI, Gemini...) for API usage.

- Google Drive and Google Sheets credentials.

- An n8n API key for your instance.
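The n8n API key is needed because the execution data is read back from the instance. A minimal sketch of such a request follows, assuming the n8n public API's `/api/v1/executions/{id}` endpoint with the `includeData` query parameter and the `X-N8N-API-KEY` header. The base URL, execution ID and key are placeholders, and the helper name `build_execution_request` is hypothetical.

```python
from urllib.parse import quote, urlencode
from urllib.request import Request

def build_execution_request(base_url: str, execution_id, api_key: str) -> Request:
    """Build (but do not send) a GET request for one execution's full data."""
    query = urlencode({"includeData": "true"})
    url = (f"{base_url.rstrip('/')}/api/v1/executions/"
           f"{quote(str(execution_id))}?{query}")
    headers = {"X-N8N-API-KEY": api_key, "Accept": "application/json"}
    return Request(url, headers=headers)

# Placeholder values; replace with your instance URL, execution ID and key.
req = build_execution_request("https://n8n.example.com/", 42, "your-api-key")
print(req.full_url)

# To send it against a real instance:
#   import json
#   from urllib.request import urlopen
#   with urlopen(req) as resp:
#       execution = json.load(resp)
```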