This workflow contains community nodes that are only compatible with the self-hosted version of n8n.

Monetize Your Private LLM Models with x402 and Ollama

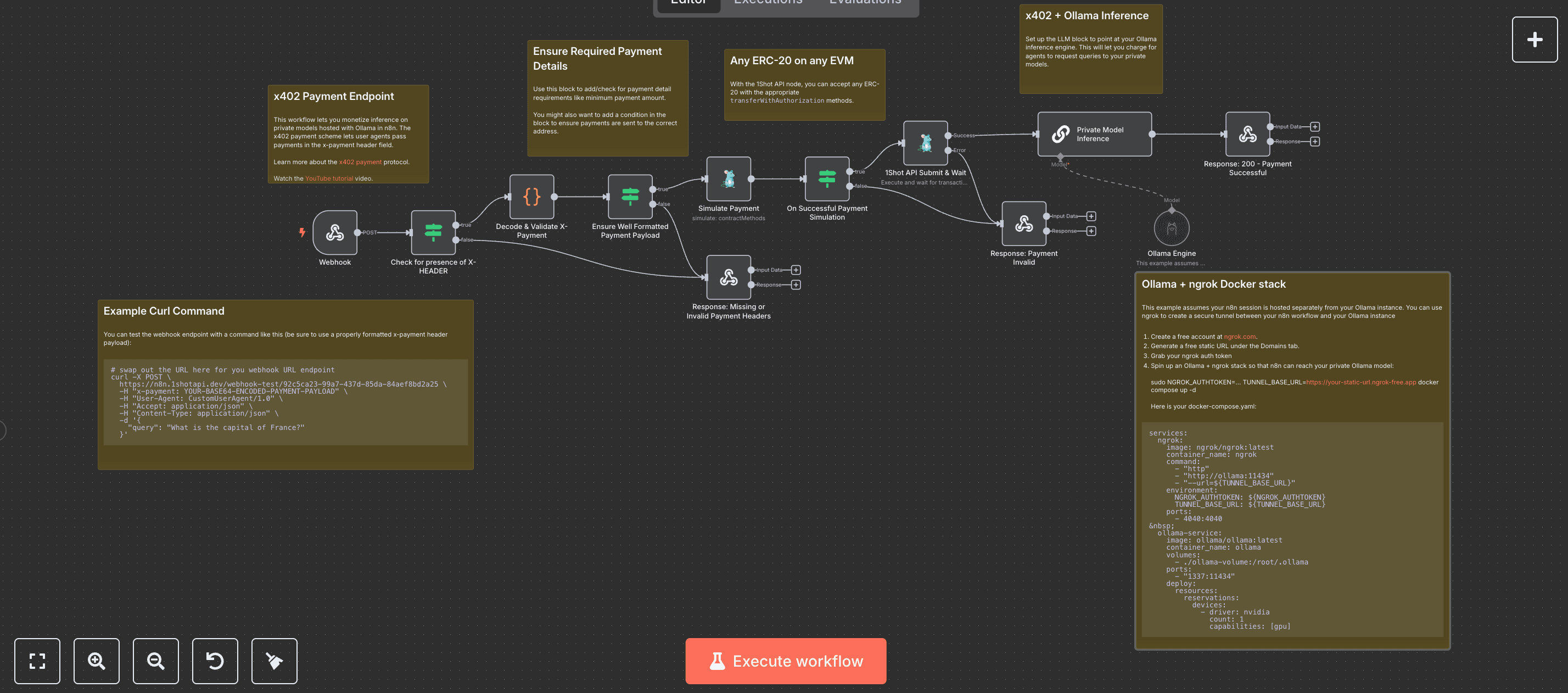

Self-hosting custom LLMs is becoming more popular, and turnkey inference tools like Ollama make it easier. With Ollama you can host your own proprietary models for customers in a private cloud or on your own hardware.

But monetizing custom-trained, proprietary models is still a challenge. It usually requires integrating a payment processor like Stripe, which doesn't support micropayments for on-demand API consumption.

With this free workflow you can quickly monetize your proprietary LLM models with the x402 payment scheme in n8n with 1Shot API.

Setup Steps:

- Authenticate the 1Shot API node against your 1Shot API business account.

- Point the 1Shot API simulate and execute nodes at the x402-compatible payment token you'd like to receive as payment.

- Configure the Ollama n8n node in the workflow (with optional authentication) to forward to your Ollama API endpoint. Users can then query it through an n8n webhook endpoint while paying you directly in your preferred stablecoin (like USDC).

Through x402, users and AI agents can pay per inference, with no overhead wasted on centralized payment processors.
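From the caller's side, a pay-per-inference request is a two-step exchange: the first call is answered with HTTP 402 and a list of accepted payment options, and the retry carries a signed payment in a header. The Python sketch below illustrates that flow under several assumptions that do not come from this workflow: the 402 body is assumed to carry an `accepts` list and the retry an `X-PAYMENT` header holding base64-encoded JSON (as I understand the x402 scheme), the `{"prompt": ...}` request body is a hypothetical shape for the webhook, and `send` and `sign` are placeholder callables standing in for your HTTP client and wallet signer.

```python
import base64
import json
from typing import Callable, Dict, Tuple

# Hypothetical transport: (url, json_body, headers) -> (status_code, parsed_json)
Transport = Callable[[str, dict, Dict[str, str]], Tuple[int, dict]]
# Hypothetical signer: payment requirement -> signed payment payload
Signer = Callable[[dict], dict]


def select_requirement(challenge: dict, network: str) -> dict:
    """Pick the first payment option offered on the given network."""
    for option in challenge.get("accepts", []):
        if option.get("network") == network:
            return option
    raise ValueError(f"no payment option offered on network {network!r}")


def build_payment_header(requirement: dict, sign: Signer) -> str:
    """Wrap the signed payload in an envelope and base64-encode it.

    The envelope field names are an assumption about the x402 payload format.
    """
    envelope = {
        "x402Version": 1,
        "scheme": requirement["scheme"],
        "network": requirement["network"],
        "payload": sign(requirement),
    }
    raw = json.dumps(envelope).encode("utf-8")
    return base64.b64encode(raw).decode("ascii")


def paid_inference(send: Transport, url: str, prompt: str,
                   sign: Signer, network: str) -> dict:
    """Call the webhook, and pay and retry once if it answers with 402."""
    body = {"prompt": prompt}  # hypothetical request shape
    status, data = send(url, body, {})
    if status == 200:
        return data
    if status != 402:
        raise RuntimeError(f"unexpected HTTP status {status}")

    # The server asked for payment: choose an option, sign it, and retry.
    requirement = select_requirement(data, network)
    headers = {"X-PAYMENT": build_payment_header(requirement, sign)}
    status, data = send(url, body, headers)
    if status != 200:
        raise RuntimeError(f"payment was not accepted (HTTP {status})")
    return data
```

Passing the transport and signer in as functions keeps the sketch independent of any particular HTTP library or wallet SDK; in practice `send` might wrap an HTTP client call to your n8n webhook URL, and `sign` would produce the token authorization that the workflow's simulate and execute nodes check.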

Walkthrough Tutorial

Check out the YouTube tutorial for this workflow to see the full end-to-end process.