Split Test AI Prompts Using Supabase & Langchain Agent

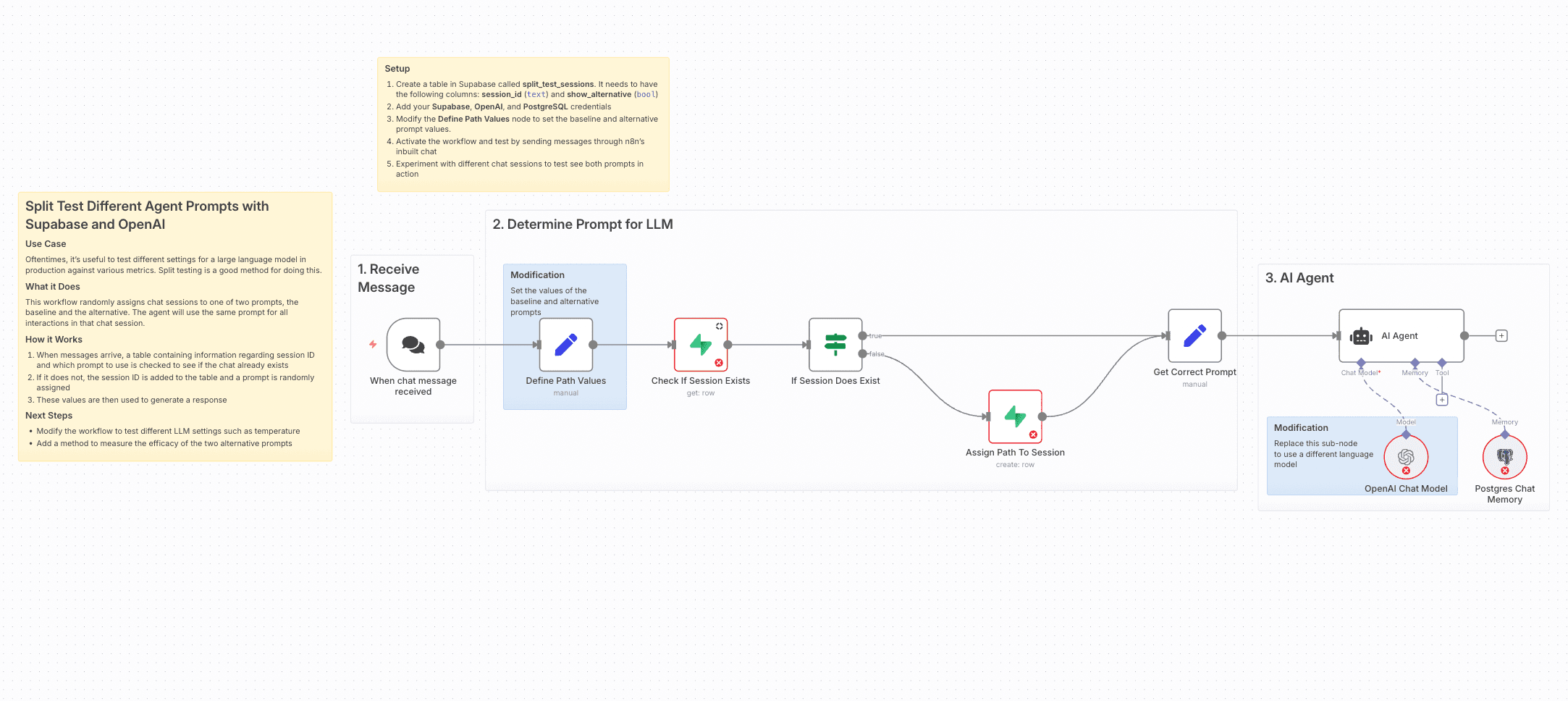

This workflow lets you A/B test different prompts for an AI chatbot powered by LangChain and OpenAI. It uses Supabase to persist session state and randomly assigns each new session to either a baseline or an alternative prompt, so the same prompt is used throughout a conversation.

🧠 Use Case

Prompt optimization is crucial for maximizing the performance of AI assistants. This workflow helps you run controlled experiments on different prompt versions, giving you a reliable way to compare performance over time.

⚙️ How It Works

- When a message is received, the system checks whether the session already exists in the Supabase table.

- If it does not, the session is randomly assigned to either the baseline or the alternative prompt, and the assignment is saved so that later messages in the same session reuse it.

- The selected prompt is passed into a LangChain Agent that uses the OpenAI Chat Model.

- Postgres is used as chat memory for multi-turn conversation support.
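The routing steps above can be sketched in Python. This is only an illustration of the logic, not the workflow's actual node code: the in-memory dict stands in for the Supabase table, and the names `PROMPTS`, `get_or_assign_variant`, `select_prompt`, the example prompt texts, and the 50/50 split are all assumptions.

```python
import random
from typing import Dict, Optional

# Hypothetical prompt texts; in the workflow these are set in the
# "Define Path Values" node. Keys mirror the show_alternative column.
PROMPTS: Dict[bool, str] = {
    False: "You are a helpful assistant.",            # baseline
    True: "You are a concise, friendly assistant.",   # alternative
}


def get_or_assign_variant(
    sessions: Dict[str, bool],
    session_id: str,
    rng: Optional[random.Random] = None,
) -> bool:
    """Return show_alternative for a session, assigning it on first contact."""
    rng = rng or random.Random()
    if session_id not in sessions:
        # New session: coin flip (assumed 50/50), then persist the result.
        sessions[session_id] = rng.random() < 0.5
    # Known session: reuse the stored assignment.
    return sessions[session_id]


def select_prompt(sessions: Dict[str, bool], session_id: str) -> str:
    """Pick the prompt text that matches the session's stored assignment."""
    return PROMPTS[get_or_assign_variant(sessions, session_id)]
```

Because the assignment is written once and then only read, every later message in the same session resolves to the same prompt, which is what keeps the two groups comparable.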

🧪 Features

- Randomized A/B split test per session

- Supabase database for session persistence

- LangChain Agent + OpenAI GPT-4o integration

- PostgreSQL memory for maintaining chat context

- Fully documented with sticky notes

🛠️ Setup Instructions

- Create a Supabase table named `split_test_sessions` with the following columns:

  - `session_id` (text)

  - `show_alternative` (boolean)

- Add credentials for:

  - Supabase

  - OpenAI

  - PostgreSQL (for chat memory)

- Modify the "Define Path Values" node to set your baseline and alternative prompts.

- Activate the workflow.

- Send messages to test both prompt paths in action.
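To experiment with the table layout before touching Supabase, the `split_test_sessions` schema from the first setup step can be mimicked locally. The sketch below uses Python's built-in `sqlite3` as a stand-in for Supabase (which runs on Postgres). The helper names are hypothetical, and the primary key on `session_id` and the "first assignment wins" behavior are assumptions not stated in the instructions above.

```python
import sqlite3


def create_sessions_table(conn: sqlite3.Connection) -> None:
    """Create a local stand-in for the split_test_sessions table."""
    conn.execute(
        """
        CREATE TABLE IF NOT EXISTS split_test_sessions (
            session_id TEXT PRIMARY KEY,        -- assumed: one row per session
            show_alternative BOOLEAN NOT NULL
        )
        """
    )


def save_assignment(conn, session_id: str, show_alternative: bool) -> None:
    """Store an assignment; an existing row for the session is left untouched."""
    conn.execute(
        "INSERT OR IGNORE INTO split_test_sessions (session_id, show_alternative) "
        "VALUES (?, ?)",
        (session_id, int(show_alternative)),
    )


def load_assignment(conn, session_id: str):
    """Return the stored flag as a bool, or None if the session is unknown."""
    row = conn.execute(
        "SELECT show_alternative FROM split_test_sessions WHERE session_id = ?",
        (session_id,),
    ).fetchone()
    return None if row is None else bool(row[0])


conn = sqlite3.connect(":memory:")
create_sessions_table(conn)
save_assignment(conn, "session-1", True)
save_assignment(conn, "session-1", False)  # ignored: the first assignment wins
```

Keeping `session_id` unique means a retried or duplicated first message cannot flip a session from one prompt to the other.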

🔄 Next Steps

- Add tracking for conversions or feedback scores to compare outcomes.

- Modify the prompt content or model settings (e.g. temperature, model version).

- Expand to multi-variant tests beyond A/B.
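As a starting point for comparing outcomes, feedback scores can be grouped by variant once they are being recorded. The sketch below is an assumption about how such data might look: the `(variant, score)` record shape, the function name `summarize_feedback`, and the sample values are all made up for illustration. Keying on a variant name rather than a boolean also carries over to tests with more than two variants.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def summarize_feedback(records: Iterable[Tuple[str, float]]) -> Dict[str, dict]:
    """Group (variant, score) pairs and report the count and mean per variant."""
    by_variant: Dict[str, List[float]] = defaultdict(list)
    for variant, score in records:
        by_variant[variant].append(score)
    return {
        variant: {"n": len(scores), "mean_score": mean(scores)}
        for variant, scores in by_variant.items()
    }


# Made-up sample data, purely illustrative.
records = [
    ("baseline", 4),
    ("alternative", 5),
    ("baseline", 3),
    ("alternative", 4),
]
summary = summarize_feedback(records)
```

A difference in means on a small sample is not conclusive by itself; collect enough sessions per variant and apply a significance test before declaring a winner.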