Disclaimer

This template is available only on self-hosted n8n because it uses the community MCP Client node.

Who is this for?

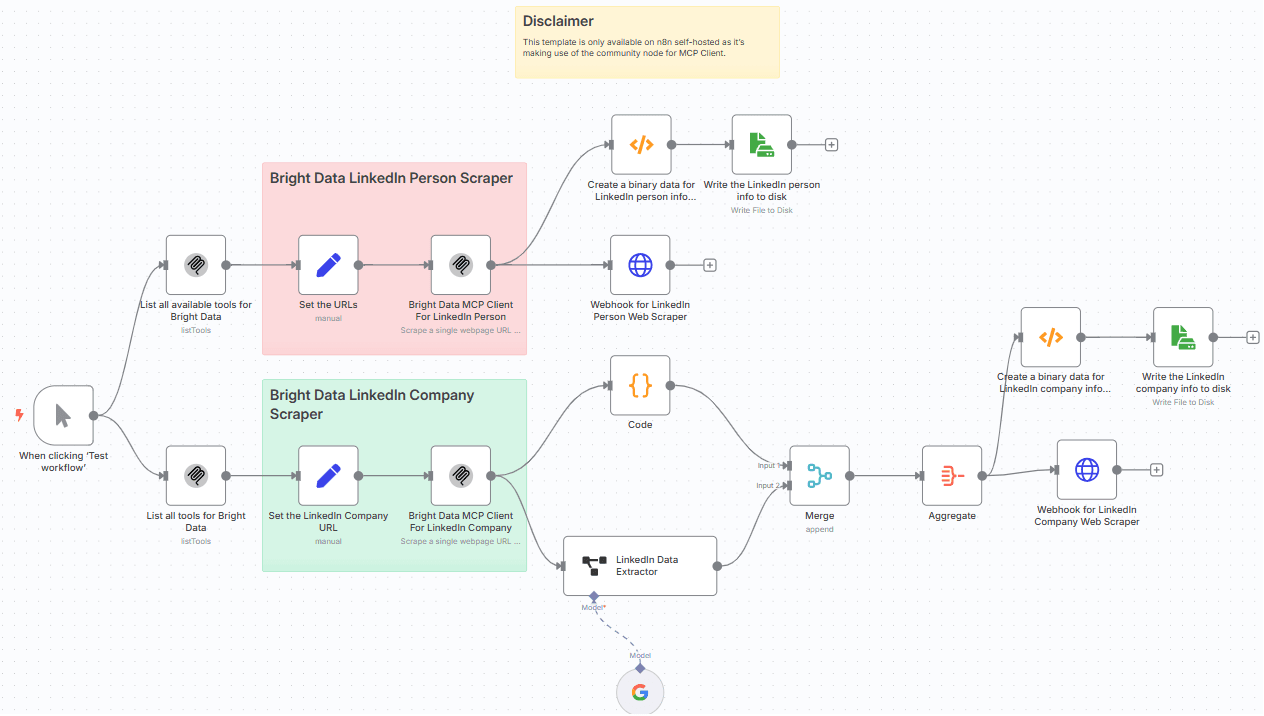

The Extract, Transform LinkedIn Data with Bright Data MCP Server & Google Gemini workflow is an automated solution that scrapes LinkedIn content via the Bright Data MCP Server, then transforms the response using a Gemini LLM. The final output is sent as a webhook notification and also persisted to disk.

This workflow is tailored for:

- Data Analysts: who require structured LinkedIn datasets for analytics and reporting.

- Marketing and Sales Teams: looking to enrich lead databases, track company updates, and identify market trends.

- Recruiters and Talent Acquisition Specialists: who want to automate candidate sourcing and company research.

- AI Developers: integrating real-time professional data into intelligent applications.

- Business Intelligence Teams: needing current and comprehensive LinkedIn data to drive strategic decisions.

What problem is this workflow solving?

Gathering structured and meaningful information from the web is traditionally slow, manual, and error-prone.

This workflow addresses that by providing:

- Reliable web scraping using the Bright Data MCP Server LinkedIn tools.

- LinkedIn person and company scraping with AI Agents set up with the Bright Data MCP Server tools.

- Data extraction and transformation with the Google Gemini LLM.

- Persistence of the LinkedIn person and company info to disk.

- A webhook notification carrying the LinkedIn person and company info.

What does this workflow do?

This n8n workflow performs the following steps:

- Trigger: Start the workflow manually.

- Input URL(s): Specify the LinkedIn person and company URLs.

- Web Scraping (Bright Data): Use the Bright Data MCP Server LinkedIn tools to extract the person and company data.

- Data Transformation & Aggregation: Use the Google Gemini LLM to transform the data.

- Store / Output: Save the results to disk and send a webhook notification.
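The Store / Output step can be pictured outside n8n with a minimal Python sketch. This is only an illustration, not the workflow's actual implementation: the function names are hypothetical, and the shape of the record depends on the prompt given to Gemini.

```python
import json
import urllib.request
from pathlib import Path


def save_to_disk(data: dict, path: Path) -> Path:
    """Write the transformed LinkedIn data to a JSON file."""
    # Create the target folder if it does not exist yet.
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(data, indent=2, ensure_ascii=False), encoding="utf-8")
    return path


def build_webhook_request(data: dict, webhook_url: str) -> urllib.request.Request:
    """Prepare (but do not send) a JSON POST request for the webhook."""
    body = json.dumps(data).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Passing the prepared request to `urllib.request.urlopen` would deliver the notification. Keeping the build and send steps separate makes it easy to inspect the payload before anything leaves the machine.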

Pre-conditions

- Knowledge of the Model Context Protocol (MCP) is essential. Please read this blog post - model-context-protocol

- You need a Bright Data account, set up as described in the Setup section below.

- You need a Google Gemini API key. Visit Google AI Studio.

- You need to install the Bright Data MCP Server @brightdata/mcp

- You need to install n8n-nodes-mcp

Setup

- Set up n8n locally with MCP Servers by following n8n-nodes-mcp.

- Install the Bright Data MCP Server @brightdata/mcp on your local machine.

- Sign up at Bright Data.

- Navigate to Proxies & Scraping and create a new Web Unlocker zone by selecting Web Unlocker API under Scraping Solutions.

- Name the Web Unlocker proxy zone mcp_unlocker in the Bright Data control panel.

- In n8n, configure the Google Gemini(PaLM) Api account with the Google Gemini API key (or access through Vertex AI or proxy).

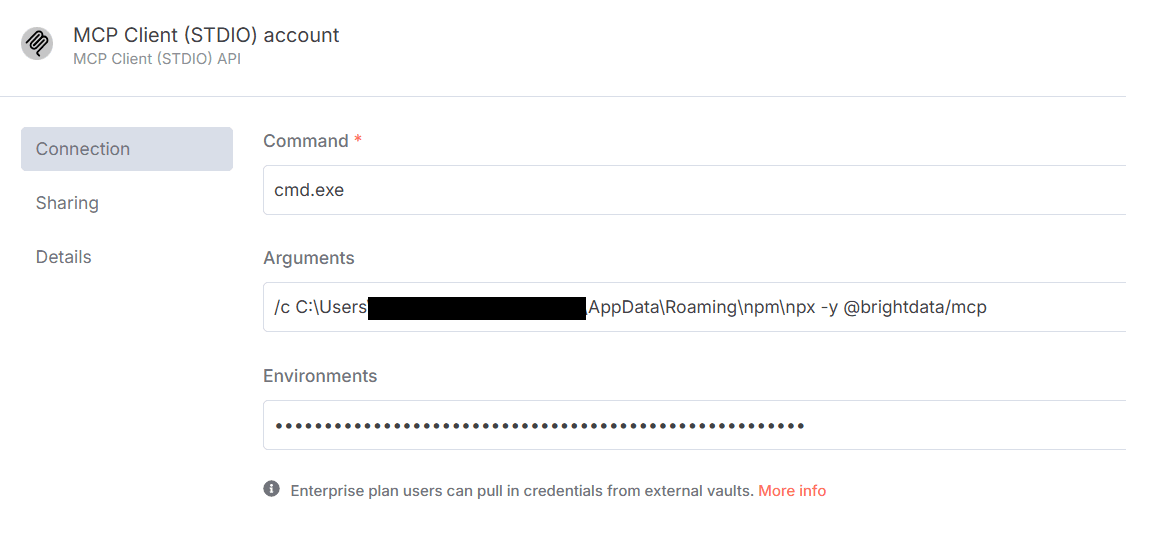

- In n8n, configure the MCP Client (STDIO) account credentials to connect to the Bright Data MCP Server.

- Make sure to copy the Bright Data API_TOKEN into the Environments textbox as API_TOKEN=<your-token>.

- Update the LinkedIn person and company URLs in the workflow.

- Update the Webhook HTTP Request node with the Webhook endpoint of your choice.

- Update the file name and path used to persist the output on disk.
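A common setup mistake is leaving the `<your-token>` placeholder in the Environments textbox. The Python sketch below shows one way to sanity-check that value before pasting it in. It assumes one NAME=VALUE pair per line, which may not match every format the MCP Client credential accepts, and both function names are hypothetical.

```python
def parse_environments(text: str) -> dict[str, str]:
    """Parse NAME=VALUE lines into a dict, ignoring blank lines."""
    env: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        name, sep, value = line.partition("=")
        if not sep or not name.strip():
            raise ValueError(f"Expected NAME=VALUE, got: {line!r}")
        env[name.strip()] = value.strip()
    return env


def check_api_token(text: str) -> str:
    """Return the API_TOKEN value, or raise if it is missing or a placeholder."""
    token = parse_environments(text).get("API_TOKEN", "")
    if not token or token == "<your-token>":
        raise ValueError("API_TOKEN is missing or still set to the placeholder")
    return token
```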

How to customize this workflow to your needs

- Different Inputs: Instead of static URLs, accept URLs dynamically via webhook or form submissions.

- Data Extraction: Modify the prompt in the LinkedIn Data Extractor node to format the data as you wish.

- Outputs: Update the Webhook endpoints to send the response to Slack channels, Airtable, Notion, CRM systems, etc.
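If URLs arrive dynamically, it helps to validate them and decide whether each one is a person or a company profile before routing it to the matching scraping tool. The following Python sketch is one possible approach, not part of the template; the function name is hypothetical, and it relies only on the public LinkedIn URL patterns `/in/<slug>` and `/company/<slug>`.

```python
from urllib.parse import urlparse


def classify_linkedin_url(url: str) -> str:
    """Return 'person' or 'company'; raise ValueError for anything else."""
    parsed = urlparse(url.strip())
    host = (parsed.hostname or "").lower()
    is_linkedin = host == "linkedin.com" or host.endswith(".linkedin.com")
    if parsed.scheme not in ("http", "https") or not is_linkedin:
        raise ValueError(f"Not a LinkedIn URL: {url!r}")

    # Drop empty segments produced by leading or trailing slashes.
    segments = [s for s in parsed.path.split("/") if s]
    if len(segments) >= 2 and segments[0] == "in":
        return "person"
    if len(segments) >= 2 and segments[0] == "company":
        return "company"
    raise ValueError(f"Unsupported LinkedIn URL path: {parsed.path!r}")
```

In n8n, the same check could live in a Code node placed right after the webhook or form trigger, so that malformed input is rejected before any scraping request is made.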