Google Gemini Chat Model and HTTP Request integration

Save yourself the work of writing custom integrations for Google Gemini Chat Model and HTTP Request and use n8n instead. Build adaptable and scalable AI workflows that work with your technology stack, all within a building experience you will love.

How to connect Google Gemini Chat Model and HTTP Request

Create a new workflow and add the first step

In n8n, click the "Add workflow" button in the Workflows tab to create a new workflow. Then add the starting point: a trigger that defines when your workflow should run, such as an app event, a schedule, a webhook call, another workflow, an AI chat, or a manual trigger. Sometimes, the HTTP Request node might already serve as your starting point.

Popular ways to use the Google Gemini Chat Model and HTTP Request integration

✨🤖 Automate Multi-Platform Social Media Content Creation with AI

AI-powered social media content generator & publisher

Respond to WhatsApp messages with AI like a pro!

Free AI image generator - n8n automation workflow with Gemini/ChatGPT

Proxmox AI agent with n8n and generative AI integration

Host your own AI deep research agent with n8n, Apify and OpenAI o3

Lead generation agent

🤖 AI content generation for auto service 🚘 automate your social media📲!

Automated AI content creation & Instagram publishing from Google Sheets

Build a PDF Document RAG System with Mistral OCR, Qdrant and Gemini AI

AI voice chat using webhook, Memory Manager, OpenAI, Google Gemini & ElevenLabs

Automated YouTube video scheduling & AI metadata generation 🎬

✨ Vision-based AI agent scraper - with Google Sheets, ScrapingBee, and Gemini

🤖 Create a Documentation Expert Bot with RAG, Gemini, and Supabase

Extract text from PDF and image using Vertex AI (Gemini) into CSV

API schema extractor

Generate AI-Powered LinkedIn Posts with Google Gemini and Gen-Imager

5 ways to process images & PDFs with Gemini AI in n8n

Extract & transform HackerNews data to Google Docs using Gemini 2.0 flash

🤖💬 Conversational AI Chatbot with Google Gemini for Text & Image | Telegram

CV resume PDF parsing with multimodal vision AI

AI content creation and auto WordPress publishing with Pexels API image workflow

Automated Instagram reels workflow

🤖 Build a Documentation Expert Chatbot with Gemini RAG Pipeline

Parse incoming invoices from Outlook using AI Document Understanding

Transcribing bank statements to markdown using Gemini Vision AI

Explore n8n nodes in a visual reference library

Automate YouTube uploads with AI-generated metadata from Google Drive

Extract invoice data from Google Drive to Sheets with Mistral OCR and Gemini

Easy image captioning with Gemini 1.5 Pro

AI-powered product research & SEO content automation

Transform long videos into viral shorts with AI and schedule to social media using Whisper & Gemini

High-level service page SEO blueprint report generator

Stock market daily digest with Bright Data scraping & Gemini AI email reports

📈 Receive daily market news from FT.com to your Microsoft Outlook inbox

Create faceless videos with Gemini, ElevenLabs, Leonardo AI & Shotstack

Create custom PDF documents from templates with Gemini & Google Drive

Generate AI YouTube shorts with Flux, Runway, Eleven Labs and Creatomate

Automated stock sentiment analysis with Google Gemini and EODHD News API

Generate UGC marketing videos for eCommerce with Sora 2 and Gemini

Auto-generate & post AI images to Facebook using Gemini & Pollinations AI

WhatsApp RAG chatbot with Supabase, Gemini 2.5 Flash, and OpenAI embeddings

Build and update RAG system with Google Drive, Qdrant, and Gemini Chat

Scrape Google Maps by area & generate outreach messages for lead generation

Resume screening & behavioral interviews with Gemini, Elevenlabs, & Notion ATS

Perform SEO keyword research & insights with Ahrefs API and Gemini 1.5 Flash

Google Maps business scraper & lead enricher with Bright Data & Google Gemini

Bitrix24 AI-Powered RAG Chatbot for Open Line Channels

LinkedIn profile extract and build JSON resume with Bright Data & Google Gemini

Doctor appointment management system with Gemini AI, WhatsApp, Stripe & Google Sheets

Automate Etsy data mining with Bright Data Scrape & Google Gemini

AI-powered product video generator (Foreplay + Gemini + Sora 2)

Analyze Facebook Ads & send insights to Google Sheets with Gemini AI

Visual regression testing with Apify and AI Vision Model

AI-powered candidate shortlisting automation for ERPNext

Hacker News throwback machine - see what was hot on this day, every year!

Automate salon appointment management with WhatsApp, GPT & Google Calendar

Turn BBC News articles into podcasts using Hugging Face and Google Gemini

Intelligent web query and semantic re-ranking flow using Brave and Google Gemini

Stock technical analysis with Google Gemini

Generate content strategy reports analyzing Reddit, YouTube & X with Gemini

Line chatbot with Google Sheets memory and Gemini AI

🤖🧑💻 AI agent for top n8n creators leaderboard reporting

Automate news publishing to LinkedIn with Gemini AI and RSS feeds

Auto-generate YouTube chapters with Gemini AI & YouTube Data API v3

Automate AI video creation & multi-platform publishing with Gemini & Creatomate

Generate & schedule social posts with Gemini/OpenAI for X and LinkedIn

University FAQ & calendar assistant with Telegram, MongoDB and Gemini AI

Learn anything from HN - get top resource recommendations from Hacker News

Text-to-image generation with Google Gemini & enhanced prompts via Telegram Bot

Automated viral content engine for LinkedIn & X with AI generation & publishing

Document analysis & chatbot creation with Llama Parser, Gemini LLM & Pinecone DB

Automated resume scoring with Gemini LLM, Gmail and Notion job profiles

Convert PDF documents to AI podcasts with Google Gemini and text-to-speech

Scrape Web Data with Bright Data, Google Gemini and MCP Automated AI Agent

Build a WhatsApp assistant with memory, Google Suite & multi-AI research and imaging

Auto-generate SEO articles in WordPress with Gemini AI and OpenAI images

Google search engine results page extraction and summarization with Bright Data

YouTube video to AI-powered auto blogging and affiliate automation

Personalized email outreach with LinkedIn & Crunchbase data and Gemini AI review

🛠️ Auto n8n updater (Docker)

Google trend data extract & summarization with Bright Data & Google Gemini

Multilingual voice & text Telegram bot with ElevenLabs TTS and LangChain agents

Build a multi-modal Telegram AI assistant with Gemini, voice & image generation

AI-powered auto-generate exam questions and answers from Google Docs with Gemini

Generate viral Facebook posts with Gemini 2.0 & AI image generation

LinkedIn job hunting & outreach automation with Apify, Gemini AI, and Gmail

Build a RAG system by uploading PDFs to the Google Gemini File Search Store

Extract pay slip data with Line Chatbot and Gemini to Google Sheets

AI-powered automated outreach with scheduling, Gemini, Gmail & Google Sheets

Deep research agent - automated research & Notion report builder

Extract Amazon best seller electronic info with Bright Data and Google Gemini

Automate job search with LinkedIn, Google Sheets & AI

Auto-generate tech news blog posts with NewsAPI & Google Gemini to WordPress

Build a RAG system with automatic citations using Qdrant, Gemini & OpenAI

Auto-generate LinkedIn content with Gemini AI: posts & images 24/7

Personal knowledgebase AI agent

Create YouTube videos daily from Google Sheets using MagicHour + Gemini

AI YouTube playlist & video analyst chatbot

Summarize YouTube videos from transcript for social media

Generate AI songs + music videos using Suno API, Flux, Runway and Creatomate

AI-powered WhatsApp chatbot with Venom Bot & Google Gemini (no official API)

Generate video ads with Gemini 2.5 flash images & FAL WAN animation

Automate WhatsApp customer support with voice transcription, FAQ & appointment scheduling

🎓 Optimize Speed-Critical Workflows Using Parallel Processing (Fan-Out/Fan-In)

Smart LinkedIn job filtering with Google Gemini, CV matching, and Google Maps

Stock market analysis & prediction with GPT, Claude & Gemini via Telegram

Automated HR screening with VAPI AI calls, Gemini analysis & Google Sheets

Create AI-ready vector datasets for LLMs with Bright Data, Gemini & Pinecone

Summarize YouTube transcripts in any language with Google Gemini & Google Docs

Automated LinkedIn job hunter: get your best daily job matches by email

Analyze Meta Ad Library Video Ads with Gemini and store results in Google Sheets

AI email reply based on HubSpot data + Slack approval

Daily AI news briefing and summarization with Google Gemini and Telegram

Convert RSS feeds into a podcast with Google Gemini, Kokoro TTS, and FFmpeg

Automate job search & resume matching with LinkedIn, Gemini AI & Google Sheets

Search & summarize web data with Perplexity, Gemini AI & Bright Data to webhooks

Smart message batching AI-powered Facebook Messenger chatbot use Data Table

Qualify Meta ads leads with WhatsApp verification, Gemini AI & Zoho CRM

Indeed data scraper & summarization with Airtable, Bright Data & Google Gemini

Generate & publish AI cinematic videos to YouTube Shorts using VEO3 & Gemini

Extract specific website data with form input, Gemini 2.5 flash and Gmail

Generate YouTube scripts for shorts & long-form with Gemini AI and Tavily Research

Automate Google Ads search term analysis with Gemini AI and send to Slack

Scrape LinkedIn jobs with Gemini AI and store in Google Sheets using RSS

Search Google, Bing, Yandex & extract structured results with Bright Data MCP & Google Gemini

Build document RAG system with Kimi-K2, Gemini embeddings and Qdrant

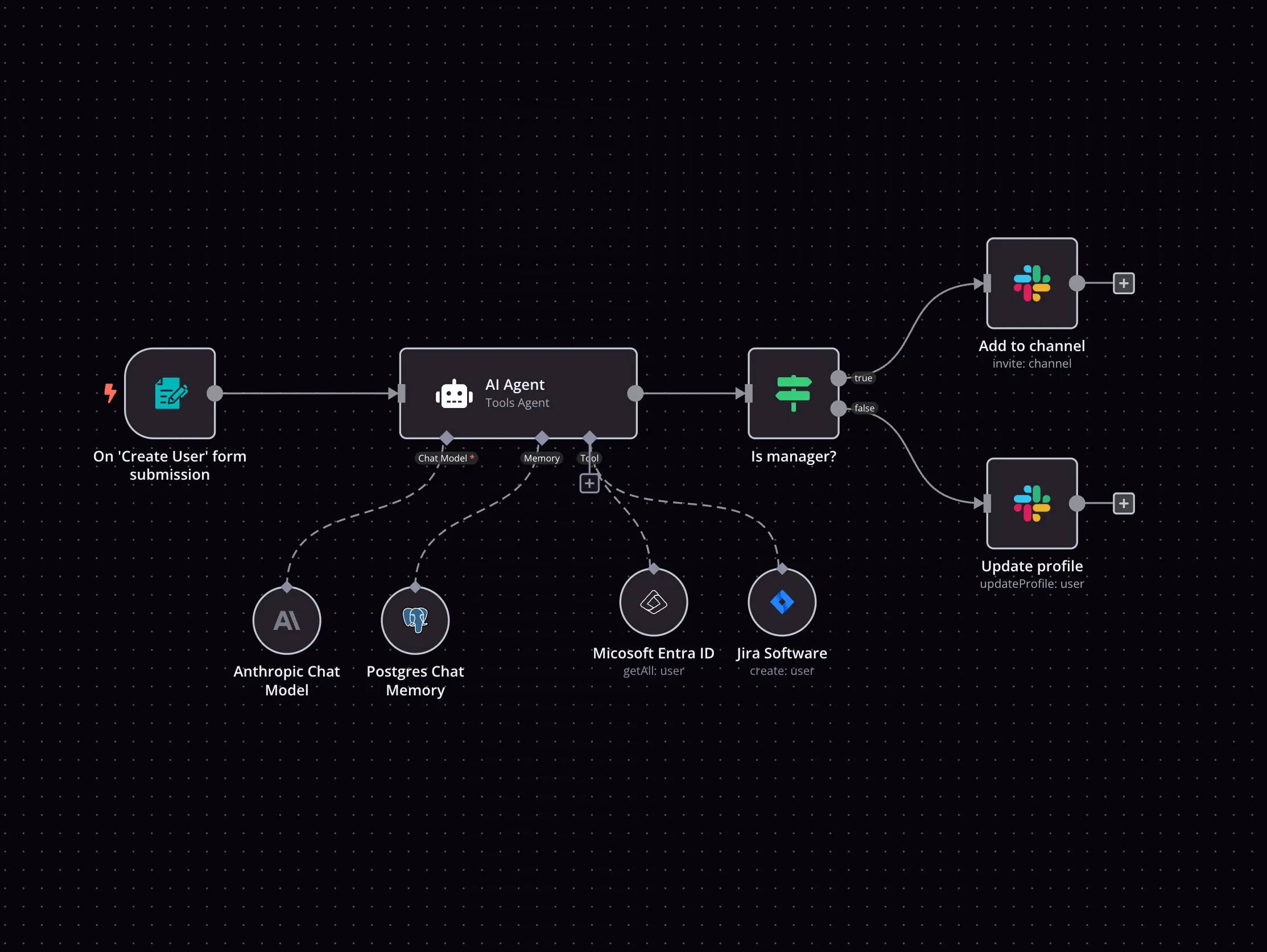

Build an AI IT support agent with Azure Search, Entra ID & Jira

YouTube transcription & translation to Google Docs with Gemini AI

Automate ITSM ticket classification and resolution using Gemini, Qdrant and ServiceNow

Automated video analysis: AI-powered insight generation from Google Drive

AI-Powered Vendor Policy & RSS Feed Analysis with Integrated Risk Scoring

US stocks earnings calendar AI updates to Telegram (Finnhub + Gemini)

Legal Case Research Extractor, Data Miner with Bright Data MCP & Google Gemini

Transform product photos into social media videos with Gemini AI, Kling & LATE

Create & auto-publish YouTube content with Gemini AI, face thumbnails & human review

Generate SEO-optimized WordPress blogs with Gemini, Tavily & human review

One Telegram chat to edit, thumbnail & auto-post your videos everywhere

Transform YouTube videos into interactive MCQ quizzes with Google Forms & Gemini AI

Summarize Glassdoor company info with Google Gemini and Bright Data web scraper

Build a knowledge base chatbot with Jotform, RAG Supabase, Together AI & Gemini

Generate Shopify product listings from images with Gemini AI and Airtable

WhatsApp virtual agent with Gemini AI - handles text & voice with knowledge base

Generate AI video avatars from URLs with HeyGen, Gemini & upload to social media

Generate product images & videos with Gemini AI, DeepSeek, and GoAPI for e-commerce

Create stunning AI images directly from WhatsApp with Gemini

Generate SEO-optimized titles & meta descriptions with Bright Data & Gemini AI

WhatsApp-to-social-media content generation with GPT-4 & approval workflow

Build a multi-functional Telegram bot with Gemini, RAG PDF search & Google Suite

Generate AI images via Telegram using Gemini & Pollinations

Generate LinkedIn posts with Gemini content & Imagen images for instant publishing

Generate images with Pollinations & blog articles with Gemini 2.5 from Telegram

Multi-agent AI content creator for SEO blogs & newsletters with OpenRouter, DALL-E, Gemini

Auto-generate social media posts from URLs with AI, Telegram & multi-platform posting

Perplexity-style iterative research with Gemini and Google Search

AI candidate screening pipeline: LinkedIn to Telegram with Gemini & Apify

Generate & publish AI faceless videos to YouTube Shorts using Sora 2

Google SERP + trends and recommendations with Bright Data & Google Gemini

Generate market research reports from Google Maps reviews with Gemini AI

Automated meta ads analysis with Gemini AI, ScrapingFlash, and Google Sheets

Build a self-updating RAG system with OpenAI, Google Gemini, Qdrant and Google Drive

Transform podcasts into viral social media clips with Gemini AI and multi-platform posting

Create UGC style product ad video via Telegram + Gemini + Kie.ai’s Veo3.1 API

AI blog generator for Shopify products using Google Gemini and Google Sheets

💅 AI Agents Generate Content & Automate Posting for Beauty Salon Social Media 📲

Scrape ProductHunt using Google Gemini

Facebook comment AI moderator with Notion & Gemini

Automate lead meeting scheduling with Zoho CRM, Google Calendar & Gemini AI

GPT-4o, RunwayML, ElevenLabs for Social Media

Automated property & restaurant bookings with AI voice calls via Telegram

Smart Amazon shopping assistant with Gemini AI and Telegram

Automate vendor due diligence research with Gemini & Jina AI

End of turn detection for smoother AI agent chats with Telegram and Gemini

Generate AI stock reports w/ fundamental, technical, & news analysis (Free APIs)

Festival social media automation with Gemini AI for X/Twitter & Facebook

Create AI nature videos with Sora 2 (Kie AI), Gemini, and send to Telegram

Gemini-powered Facebook comment & DM assistant with Notion

Auto-generate AI videos with Gemini, KIE AI Sora-2 & Blotato (Multi-Platform)

Auto-Generate & Publish SEO Blog Posts to WordPress with OpenRouter & Runware

End-to-end AI blog research and writer with Gemini AI, Supabase and Nano-Banana

AI-powered news update bot for Zalo using Gemini and RSS feeds

Generate visual summary & knowledge graph insights for your email

Create e-commerce promotional carousels with Gemini 2.5 & social publishing

Analyze search intent for keywords with Google scraping, Bright Data, and Gemini AI

Automate cryptocurrency analysis & reports with Google Gemini and CoinGecko email alerts

Nano Banana/Gemini 2.5 Telegram bot with multi-modal functionality

Morning briefing podcast: generate daily summaries with Gemini AI, weather, and calendar

Auto-Translate YouTube Video Content with Google Gemini AI

Automate Solana trading with Gemini AI, multi-timeframe analysis & AFK Crypto

Analyze YouTube comments sentiment with Gemini AI and Google Sheets

IPL cricket rules Q&A chat bot using RAG and Google Gemini API

Web research assistant: automated search & scraping with Gemini AI and spreadsheet reports

Voice AI customer support for WooCommerce using VAPI, GPT-4o & Gemini with RAG

Automate code reviews for GitLab MRs with Gemini AI and JIRA context

Publish LinkedIn & X posts with Telegram Bot, Gemini AI & Vector Memory

Convert Fathom meeting transcripts to formatted Google Docs with AI summaries

Automated invoice data extraction with LlamaParse, Gemini 2.5 & Google Sheets

Extract university term dates from Excel using CloudFlare markdown conversion

Scrape & analyze Google Ads with Bright Data API and AI for email reports

Automate blog creation & publishing with Gemini, Ideogram AI and WordPress

Generate company stories from LinkedIn with Bright Data & Google Gemini

Extract, summarize & analyze Amazon price drops with Bright Data & Google Gemini

AI-powered email forwarding to WhatsApp with Gmail, Outlook & Google Gemini

Generate & publish AI videos with Sora 2, Veo 3.1, Gemini & Blotato

Generate and Publish SEO-Optimized Blog Posts to WordPress

Transform meeting transcripts into AI-generated presentations with Google Slides & Flux

Email news briefing by keyword from Bright Data with AI summary

Monitor new CVEs for bug bounty hunting with Gemini AI and Slack alerts

AppSheet intelligent query orchestrator: query any data!

Automated security alert analysis with Sophos, Gemini AI, and VirusTotal

Automate RSS-to-social media with AI summaries and image generation

Weekly competitor content digest with Gemini & OpenAI, Google Sheets, and Firecrawl

Generate an AI summary of your Notion comments

Summarize RSS news with Gemini AI and store in Google Sheets

Auto-comment on Reddit posts with AI brand mentions & Baserow tracking

Generate medical reports from emails with Gemini AI & Google Workspace

Landing page analyzing agent

WhatsApp dietitian AI chatbot workflow

Auto-generate AI videos and publish to YouTube with Gemini, KIE AI & Blotato

Extract, Transform LinkedIn Data with Bright Data MCP Server & Google Gemini

Automate social media posts from website articles with Gemini AI, LinkedIn & X/Twitter

Enrich Company Data from LinkedIn via Bright Data & Google Sheets

Landing page conversion optimizer with Gemini 2.5-pro & Telegram

Structured data extract & data mining with Bright Data & Google Gemini

AI-powered email triage & auto-response system with OpenAI agents & Gmail

Fraudulent booking detector: Identify suspicious travel transactions with Google Gemini

Automate weekly tutorials from trending GitHub repos with Gemini AI to WordPress

Auto-generate & post LinkedIn carousels with Gemini AI and Post Nitro

Monitor core web vitals with Lighthouse, Gemini AI, Telegram alerts & Google Sheets

Daily RAG research paper hub with arXiv, Gemini AI, and Notion

Monitor AI research papers with Gemini-powered filtering and email summaries

Twitter content automation with Gemini AI for tweets, images, and engagement

Fast-track CV screening with AI analysis from Gmail to Slack and Google Sheets

Personalized cold email generator with Supabase, Smartlead & Google Gemini AI

Financial news digest with Google Gemini AI to Outlook email

Discover viral social media trends with Gemini Flash & Apify scraping

Generate academic assignments with Google Gemini & deliver via Telegram/PDF

AI video automation engine - generate & publish YT shorts with Veo-3 or Sora 2

Reddit Sentiment Analysis for Apple WWDC25 with Gemini AI and Google Sheets

AI-powered YouTube meta generator with GPT-4o, Gemini & content enrichment

Extract & summarize Bing Copilot search results with Gemini AI and Bright Data

Generate SEO meta tags with Gemini AI & competitor analysis using Google Sheets

AI-powered multi-stage web search and research suite

Extract and structure Hacker News job posts with Gemini AI and save to Airtable

Generate a legal website accessibility statement with AI and WAVE

Extract & summarize Indeed company info with Bright Data and Google Gemini

YouTube comment sentiment analysis with Google Gemini AI and Google Sheets

Turn new Shopify products into SEO blogs with Perplexity, Gemini and Sheets

Automate data extraction from faxes & PDFs using Google Gemini and Google Sheets

Match CVs to job descriptions with Gemini analysis & email reports

Automate CV screening & analysis with Telegram, Gemini AI & Google Workspace

Conversational sales agent for WooCommerce with GPT-4, Stripe and CRM integration

AI email triage & alert system with GPT-4 and Telegram notifications

Automate RSS to Medium publishing with Groq, Gemini & Slack approval system

Segment PDFs by table of contents with Gemini AI and Chunkr.ai

Create hours-long wave music videos with Suno, ffmpeg-api and YouTube

Extract & analyze brand content with Bright Data and Google Gemini

Automate legal lien documents with Gemini AI, Apify, and Google Workspace

AI-powered lead research & qualification using Relevance AI

Automate meeting notes summaries with Gemini AI & Slack notifications

Automate daily Hindu festival posts on X with Google Gemini and GPT-4o Mini 🤖

Automated YouTube publishing from Drive with GPT & Gemini metadata generation

YouTube report generator

Auto-curate & post LinkedIn company page using RSS + Gemini AI + Templated.io

Extract & summarize Wikipedia data with Bright Data and Gemini AI

Generate MemeCoin Art with Gemini Flash & NanoBanana and Post to Twitter

Audio transcription & chat bot with AssemblyAI, Gemini, and Pinecone RAG

Automate video creation with Gemini Prompts and Vertex AI to Google Drive

Generate AI-curated Reddit digests for Telegram, Discord & Slack with Gemini

Automate Gmail labeling with Gemini AI & build InfraNodus knowledge graph with Telegram alerts

Generate AI short-form health videos with Gemini, Veo 3 and Google Sheets

Extract & search ProductHunt data with Bright Data MCP and Google Gemini AI

💾 Generate Blog Posts on Autopilot with GPT‑5, Tavily and WordPress

AI WhatsApp support with human handoff using Gemini, Twilio, and Supabase RAG

Automate UX research planning with Gemini AI, Google Docs, and human feedback

Generate stock trading signals with Gemini 2.5 Pro & TwelveData via Telegram Bot

Filter real-time news with Gemini AI and BrowserAct for Telegram channels

Automated ticket triage for HaloPSA with Gemini AI summary generation

Support call analysis & routing with Gemini AI, ElevenLabs & Telegram alerts

Multilingual YouTube metadata translator with Gemini AI and Google Sheets

SOL trading recommendations w/ multi-timeframe analysis using Gemini & Telegram

Build a Facebook Messenger customer service AI chatbot with Google Gemini

Multi-agent salon appointment management with Telegram, GPT5-mini & Claude MCP

Generate LinkedIn agency content with GPT‑4o, Claude 4.5 and Gemini

Daily Gemini-powered global trend analysis with GDELT, NewsAPI & Discord

Scrape & enrich Google Maps leads with Decodo API and Gemini 2.5 Flash

Generate answer engine optimization strategy with Firecrawl, Gemini, OpenAI

Automate GitHub PR linting with Google Gemini AI and auto-fix PRs

Analyze competitor content gaps with Gemini AI, Apify & Google Sheets

Convert training prescriptions to Intervals.icu workouts with Claude Opus AI

KaizenCrypto - smarter crypto decisions with multi-timeframe AI analysis

Automated Feedaty Review Scraper 📈 using ScrapegraphAI & Gemini 3

Automate SEO blog creation from trends using Gemini AI, Apify, and Google Docs

Ideal customer profile (ICP) generation: AI, Firecrawl, Gemini, Telegram

Automate Morning Brew–style Reddit Digests and Publish to DEV using AI

Analyze Crunchbase startups by keyword with Bright Data, Gemini AI & Google Sheets

AI-powered Elementor blog post automation system for agencies with Gemini

Automated expense tracking with AI receipt analysis & Google Sheets

Extract structured data from Brave Search with Bright Data MCP & Google Gemini

Create daily Google Alerts digest with Gemini AI summarization and Gmail

Forum pulse for Telegram: community monitoring with Gemini & Groq AI models

Aggregate Twitter/X content to Telegram channel with BrowserAct & Google Gemini

Automated crypto market summaries using Gemini AI and CoinGecko data

Telegram fitness bot: Custom workout plans from photo/text using Gemini AI

Automated daily AI news digest: scrape, categorize & save to Google Sheets

📑 Anonymize & reformat CVs with Gemini AI, Google Sheets & Apps Script

Generate personalized sales outreach from LinkedIn job signals with Apify & Google Gemini

Automate quiz creation from documents with Google Gemini and Jotform

Product review analysis with BrowserAct & Gemini-powered recommendations

YouTube to Telegram summary bot with Decodo & Gemini AI

WhatsApp AI agent that understands text, images, and audio

Automate multilingual Slack communication (JA ⇄ EN) with Gemini 2.5 Flash

Product concept to 3D showcase with Claude AI & DALL-E packaging design

ZenTrade - AI Stock Trade Advisor

Extract and analyze web data with Bright Data & Google Gemini

Create and publish AI avatar short videos with Gemini, HeyGen and Google Sheets

Record transactions & generate budget reports with Gemini AI, Telegram & Firefly III

Automate meeting summaries with Google Drive, Gemini AI & Google Docs

Indeed job matching to Telegram with BrowserAct, Gemini & Telegram

AI text-to-image for Telegram (Gemini + Hugging Face FLUX)

WordPress blog generation with AI Research, Image Creation & Auto Publishing

Goodreads quote extraction with Bright Data and Gemini

Generate & post unique MCQ polls to Telegram with Gemini AI and Google Sheets

AI-powered lead processing from Apify with Gemini and Google Sheets

Build enterprise RAG system with Google Gemini file search & retell AI voice

Agentic B2B lead enrichment from Google Search to Google Sheets

Build a knowledge-based WhatsApp assistant with RAG, Gemini, Supabase & Google Docs

Send daily inspirational quotes with Gemini translation to Telegram subscribers

Generate content strategy insights from Reddit & X using Gemini AI analysis

Create WooCommerce products via Telegram bot with Google Gemini AI

AI-powered security analysis for n8n with Google Gemini and n8n audit API

AI-powered multi-channel lead outreach with JotForm, Gemini AI & HeyReach

Generate AI-powered markdown posts from workflow JSON with Gemini & LlamaIndex

Generate Interior Moodboards with Claude/Gemini Agents, Hugging Face Image Generation, and PDF Export

YouTube channel monitor with Gemini AI transcription & summarization

Automated Blog Creation & Multi-Platform Publishing with GPT/Gemini & WordPress

WhatsApp receipt OCR & AI data extraction with Twilio, LlamaParse & Gemini

Product ingredient safety analyzer with Google Gemini via WhatsApp

AI-powered lead qualification with Zoho CRM, People Data Labs and Google Gemini

Qualify & verify leads with Google Gemini and sync to HubSpot from Jotform

Generate & publish professional video ads with Veo 3, Gemini & Creatomate

Transform quotes to viral videos with Gemini, GPT & ElevenLabs for social media

Build a website-powered customer support chatbot with Decodo, Pinecone and Gemini

Extract and log data to Airtable with Google Gemini, ILovePDF, and Google Drive

Send AI-personalized LinkedIn connection requests from Google Sheets with Gemini

Create AI product review videos with Gemini, Veo 3, Blotato and Google Sheets

Monitor brand mentions on X with Gemini AI visual analysis & Telegram alerts

Generate personalized and aggregate survey reports with Jotform and Gemini AI

LinkedIn email finder with AI domain detection using Google Sheets & Hunter.io

Screen job applicants with Gemini AI: Jotform to Notion hiring pipeline

Summarize YouTube videos with Gemini AI and send via Telegram

Track meal nutrition from meal photos with LINE, Google Gemini and Google Sheets

Send AI-enhanced economic calendar alerts to Telegram with Gemini-2.0-Flash

Get IPO calendar alerts via Telegram with Finnhub API and Gemini AI

Auto-generate competitive battle cards from websites with Zoho CRM and Gemini AI

Automate Shopify product CSV from images using Gemini, GPT-4o, Google Drive & Sheets

Create and publish AI videos with Sora 2 Cameos, Gemini, and Blotato

Create AI shorts with HeyGen, Creatomate, Replicate, Gemini and OpenAI

Music Producer Chatbot 🎵🎤 using Gemini + Suno (via Kei AI) & Google Drive Upload

Automate LinkedIn content using viral post analysis with Gemini AI & Flux image generation

Generate & publish SEO-optimized Shopify blog articles with Gemini & ChatGPT

Scrape high-engagement LinkedIn posts and auto-post with Gemini images

Create an all-in-one Discord assistant with Gemini, Llama Vision & Flux images

Automated UPSC current affairs digest from The Hindu to Google Sheets with Gemini AI

Generate AI-curated sales quotes with OpenAI, Qdrant & CraftMyPDF PDF delivery

X (Twitter) brand sentiment analysis with Gemini AI & Slack alerts

Create AI-driven social media posts and publish to all major platforms

Generate and publish AI short videos using Gemini, Sora-2, Apify, and Google Sheets

Automated LinkedIn lead enrichment pipeline using Apollo.io & Google Sheets

Automate HR celebrations with Google Gemini, Sheets & Chat for team milestones

Create and publish AI recipe infographics with Gemini, Nanobanana Pro and Blotato

Extract invoice data from PDFs with Gemini AI to Google Sheets 📄

Publish SEO blogs to WordPress with GPT-4.1, DALL-E, Gemini, and Google Sheets

Generate and deploy websites with Gemini AI and Netlify auto-deployment

AI-powered NDA review & instant alert system - Jotform, Gemini, Telegram

Analyze customer sentiment with Zoho CRM, Google Gemini & send Gmail alerts

Generate and publish Instagram carousels with Gemini and Google Slides

Create and publish SEO blog posts using Google Sheets, Gemini/OpenAI, Ideogram, and WordPress

Automated job scraping with SerpAPI, Gemini AI filter & email notifications

Automate rental agreements with BoldSign, Google Sheets & Gemini AI

Automate business card management with LINE, AI, and Google Sheets

Create tutorial videos from documentation URLs with Claude, Google TTS, Remotion and YouTube

Smart human takeover & auto pause AI-powered Facebook Messenger chatbot

Generate Shopify collection blog posts with Perplexity, Gemini and Google Sheets

Detect duplicate form submissions & send AI responses with Jotform and Gemini

Manage Streak CRM via WhatsApp using GPT‑4.1 and Gemini

Generate beauty brand hashtags with Gemini AI, website analysis and SerpAPI

WordPress SEO publisher with Anthropic AI, Google Docs & media auto

Auto-track Amazon prices with Google Gemini & send alerts to Telegram

Generate content ideas from social trends with Apify, Gemini, and Google Sheets

Optimize Resumes & Generate Cover Letters with Gemini AI and PDF.co

Automated receipt processing for cashback with Jotform, Gemini 2.5 & Notion

Qualify CSV leads, enrich emails, and deliver results with Google Sheets, Drive, WhatsApp, and GPT-5-NANO

Filter URLs with AI-powered robots.txt compliance & source verification

Turn HR news into policy update tasks and a weekly Gmail digest with RSS, Google Drive, Gemini, and GPT-5.2

Estimate construction costs from text with Telegram, OpenAI and DDC CWICR

Generate visual resumes from Telegram using Google Gemini and BrowserAct

Automated lead qualification for JotForm with Google Gemini & Telegram alerts

Create sprint goals from Google Sheets with Pega Agile Studio and Google Gemini

Create Shopify products via a Telegram bot with Google Gemini AI

Create WordPress posts and Telegram updates from links with BrowserAct and Gemini

Create active learning notes from YouTube videos with Gemini and Notion

Generate short joke videos from Google Sheets with Google Gemini and Wavespeed AI

Convert emailed timesheets into QuickBooks invoices with OCR, AI, Gmail and Sheets

Monitor and alert stalled deals in Zoho CRM with Gemini AI and Gmail

Import Faire products to Shopify using BrowserAct, Gemini, and Telegram

KodoFlow - Futures & Options Trading Copilot

Match job descriptions with resumes using Google Gemini and log scores to Google Sheets

Generate product-aware B2B leads and outreach emails with Gemini, Pinecone and Gmail

Convert GitHub commits into review-ready pull requests with Google Gemini

Repurpose blog articles into social media posts with Google Gemini AI

Verify service providers via Telegram using BrowserAct and Google Gemini

Post images from Google Drive to Discord using Gemini AI

Auto-reply to Telegram messages using BrowserAct and Google Gemini

Generate Google Sheets test script from Pega Agile Studio user stories with AI

Scrape Skool community data using the Olostep API and Google Sheets

Enrich and score B2B company leads with Clearbit, Hunter.io, and Gemini AI

Handle LINE real estate inquiries with Google Gemini and smart lead detection

Enrich and score Japanese B2B leads with gBizINFO, web scraping, and Gemini AI

Review GitHub pull requests with Gemini and post feedback automatically

Generate LinkedIn posts using Telegram, Supabase vector DB and OpenAI RAG

Extract sales insights from Scoot call transcripts using Gemini

Score and route leads with Google Gemini, Sheets, Slack and Gmail

Monitor Google reviews and draft AI responses with Gemini and Slack

Analyze YouTube video SEO and get optimization tips with Gemini AI, Sheets, Slack, and Gmail

Build your own Google Gemini Chat Model and HTTP Request integration

Create custom Google Gemini Chat Model and HTTP Request workflows by choosing triggers and actions. Nodes come with global operations and settings, as well as app-specific parameters that can be configured. You can also use the HTTP Request node to query data from any app or service with a REST API.
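As a rough illustration of what a REST query involves, the sketch below assembles the same pieces you would fill in on an HTTP Request node: a method, a URL, query parameters, and an authentication header. The endpoint `https://api.example.com/v1/items`, the parameter names, and the token are hypothetical placeholders, not a real service. The request is only built here, not sent.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_get_request(base_url: str, params: dict, token: str) -> Request:
    """Assemble a GET request from a URL, query parameters, and a bearer token."""
    # Encode the parameters into a query string, e.g. limit=10&status=open
    url = f"{base_url}?{urlencode(params)}"
    headers = {
        "Authorization": f"Bearer {token}",  # assumed auth scheme for this example
        "Accept": "application/json",
    }
    return Request(url, headers=headers, method="GET")


# Hypothetical endpoint and token, for illustration only.
req = build_get_request(
    "https://api.example.com/v1/items",
    {"limit": 10, "status": "open"},
    "YOUR_TOKEN",
)
print(req.get_method(), req.full_url)
```

In n8n these values would normally go into the node's fields rather than into code; the sketch only shows how they fit together in a single request.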

Google Gemini Chat Model and HTTP Request integration details

Google Gemini Chat Model

Google Gemini Chat Model node docs + examples

Google Gemini Chat Model credential docs

See Google Gemini Chat Model integrations

Related categories

HTTP Request

Related categories

FAQ

Can Google Gemini Chat Model connect with HTTP Request?

Yes, Google Gemini Chat Model can connect with HTTP Request using n8n.io. With n8n, you can create workflows that automate tasks and transfer data between Google Gemini Chat Model and HTTP Request. Configure nodes for Google Gemini Chat Model and HTTP Request in the n8n interface, specifying actions and triggers to set up their connection.

Can I use Google Gemini Chat Model’s API with n8n?

Yes, with n8n, you can programmatically interact with Google Gemini Chat Model’s API via pre-defined supported actions or raw HTTP requests. With the HTTP Request node, you create a REST API call. You need to understand basic API terminology and concepts.
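For readers who want to see what a raw call might look like, here is a minimal sketch of a text-generation request to the Gemini REST API. The base URL, the `generateContent` path, the `x-goog-api-key` header, and the request body shape reflect Google's public API as best understood at the time of writing and should be checked against the current documentation. The model name `gemini-2.0-flash` and the helper `build_gemini_request` are assumptions made for this example. The request is built but not sent.

```python
import json
from urllib.request import Request

# Assumed base URL for the Gemini REST API; verify against Google's docs.
GEMINI_BASE = "https://generativelanguage.googleapis.com/v1beta"


def build_gemini_request(
    prompt: str, api_key: str, model: str = "gemini-2.0-flash"
) -> Request:
    """Build (but do not send) a POST request asking the model to answer a prompt."""
    url = f"{GEMINI_BASE}/models/{model}:generateContent"
    # The prompt is wrapped in a list of contents, each holding a list of parts.
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": api_key,  # API key passed as a header
        },
        method="POST",
    )


req = build_gemini_request("Summarize this article in one sentence.", "YOUR_API_KEY")
print(req.get_method(), req.full_url)
```

The same URL, headers, and JSON body could be entered in an HTTP Request node, with the API key kept in an n8n credential instead of being written inline.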

Can I use HTTP Request’s API with n8n?

Yes, with n8n, you can programmatically interact with HTTP Request’s API via pre-defined supported actions or raw HTTP requests. With the HTTP Request node, you create a REST API call. You need to understand basic API terminology and concepts.

Is n8n secure for integrating Google Gemini Chat Model and HTTP Request?

Yes, it is safe to use n8n to integrate Google Gemini Chat Model and HTTP Request. n8n offers various features to ensure the safe handling of your data. These include encrypted data transfers, secure credential storage, RBAC functionality, and compliance with industry-standard security practices (SOC2 compliant). For hosted plans, data is stored within the EU on servers located in Frankfurt, Germany. You can also host it on your own infrastructure for added control.

Learn more about n8n’s security practices here.

How to get started with Google Gemini Chat Model and HTTP Request integration in n8n.io?

To start integrating Google Gemini Chat Model and HTTP Request in n8n, you have different options depending on how you intend to use it:

- n8n Cloud: hosted solution, no need to install anything.

- Self-host: recommended method for full control or customized use cases.

Unlike other platforms that charge per operation, step, or task, n8n charges only for full workflow executions. This approach guarantees predictable costs and scalability, no matter the complexity or volume of your workflows.

Need help setting up your Google Gemini Chat Model and HTTP Request integration?

Discover our community's latest recommendations and join the discussions about the Google Gemini Chat Model and HTTP Request integration.

Google Verification Denied

Moiz Contractor

Describe the problem/error/question Hi, I am getting a - Google hasnt verified this app error. I have Enable the API, the domain is verified on the Cloud Console, the user is added in the search console and the google do…

HTTP request, "impersonate a user" dynamic usage error

theo

Describe the problem/error/question I a http request node, I use a Google service account API credential type. I need the “Impersonate a User” field to be dynamic, pulling data from the “email” field in the previous nod…

Why is my code getting executed twice?

Jon

Describe the problem/error/question I have a simple workflow that retrieves an image from url with http node and prints the json/binary in code. I have a few logs, but I am confused why I see duplicate messages for each …

How to send a single API request with one HTTP node execution, but an array of parameters in it (like emails[all]?)?

Dan Burykin

Hi! I’m still in the beginning. Now I need to make an API call via HTTP node, and send all static parameters, but with the array of emails parameter (named it wrongly just to show what I need {{ $json.email[all] }}). Wo…

Start a Python script with external libraries - via API or Command Execution?

Tony

Hi! I have a question: I am making an app that allows a person to scrape some data via a Python library. I have a Python script that needs to be triggered after certain user actions. What is the best way to: Send a p…

Looking to integrate Google Gemini Chat Model and HTTP Request in your company?

The world's most popular workflow automation platform for technical teams

Why use n8n to integrate Google Gemini Chat Model with HTTP Request

Build complex workflows, really fast