Google Gemini Chat Model and Telegram integration

Save yourself the work of writing custom integrations for Google Gemini Chat Model and Telegram and use n8n instead. Build adaptable and scalable AI workflows that work with your technology stack, all within a building experience you will love.

How to connect Google Gemini Chat Model and Telegram

Create a new workflow and add the first step

In n8n, click the "Add workflow" button in the Workflows tab to create a new workflow. Then add a trigger, the starting point that defines when your workflow runs: an app event, a schedule, a webhook call, another workflow, an AI chat, or a manual trigger. In some cases, the HTTP Request node can already serve as your starting point.
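To make the trigger step more concrete, here is a minimal sketch of the kind of data an app-event trigger for Telegram hands to the rest of a workflow. It assumes the incoming payload is shaped like the Telegram Bot API's Update object; the function name `extract_message` and the sample values are hypothetical and are not part of n8n.

```python
from typing import Optional, TypedDict


class IncomingMessage(TypedDict):
    chat_id: int
    text: str


def extract_message(update: dict) -> Optional[IncomingMessage]:
    """Return the chat id and text of a Telegram update, or None if it has no text message."""
    message = update.get("message")
    if not isinstance(message, dict):
        return None
    chat = message.get("chat")
    text = message.get("text")
    if not isinstance(chat, dict) or "id" not in chat or not isinstance(text, str):
        return None
    return {"chat_id": chat["id"], "text": text}


# Hypothetical sample update, shaped like the Bot API's Update object.
sample_update = {
    "update_id": 1,
    "message": {
        "message_id": 10,
        "chat": {"id": 42, "type": "private"},
        "text": "Hello",
    },
}
```

Later steps in a workflow would typically pass the extracted text to the chat model and send the reply back to the same chat id.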

Popular ways to use the Google Gemini Chat Model and Telegram integration

✨🤖Automate Multi-Platform Social Media Content Creation with AI

Free AI image generator - n8n automation workflow with Gemini/ChatGPT

🤖 AI powered RAG chatbot for your docs + Google Drive + Gemini + Qdrant

🤖 AI content generation for auto service 🚘 automate your social media📲!

Nutrition tracker & meal logger with Telegram, Gemini AI and Google Sheets

🤖💬 Conversational AI Chatbot with Google Gemini for Text & Image | Telegram

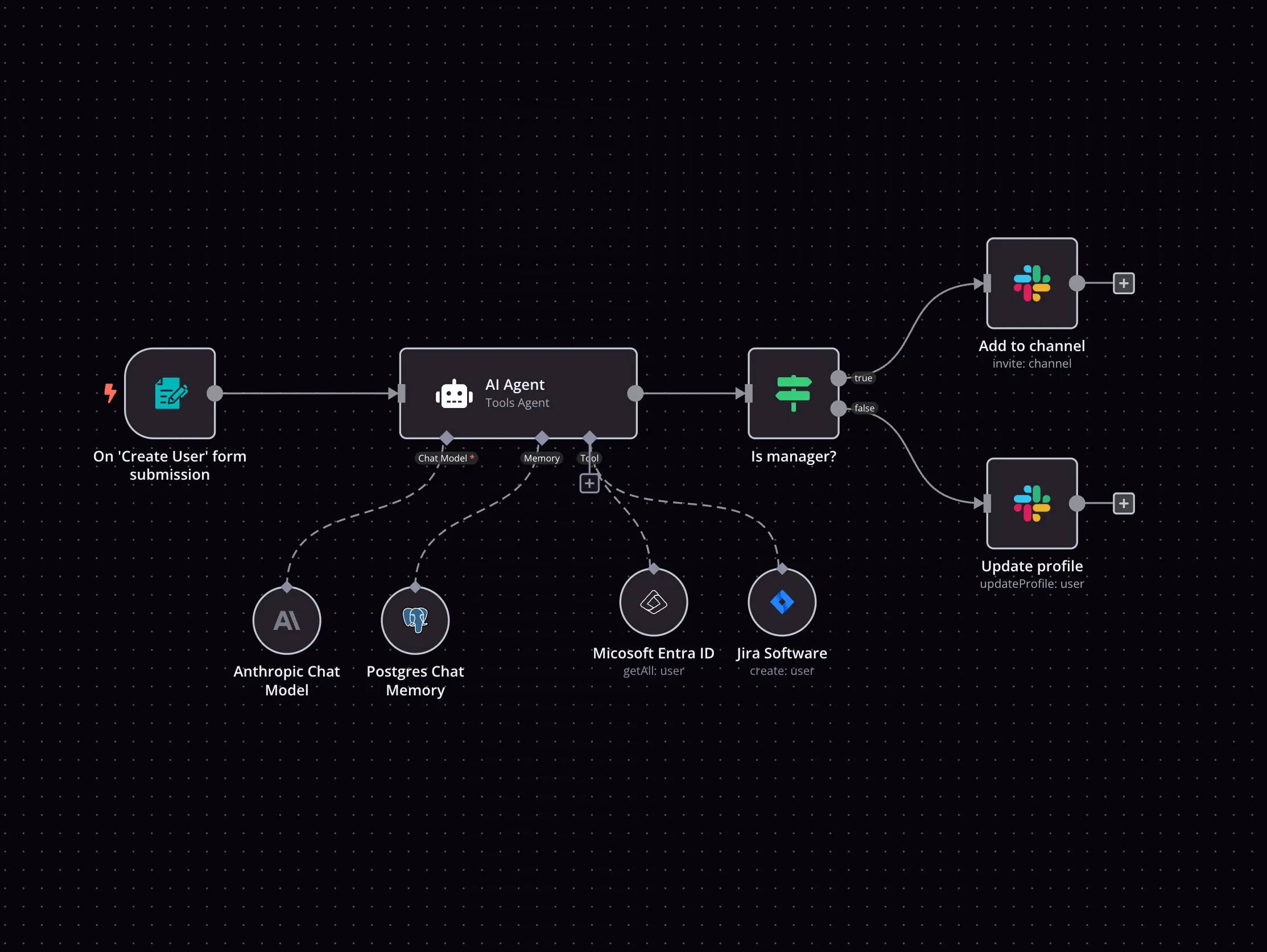

Build your own Google Gemini Chat Model and Telegram integration

Create custom Google Gemini Chat Model and Telegram workflows by choosing triggers and actions. Nodes come with global operations and settings, as well as app-specific parameters that can be configured. You can also use the HTTP Request node to query data from any app or service with a REST API.
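As an illustration of what an HTTP Request step against a REST API involves, the sketch below assembles (but does not send) a request to Google's Gemini `generateContent` endpoint. The base URL, the `x-goog-api-key` header, and the model name `gemini-1.5-flash` are assumptions to verify against Google's current API documentation; `build_gemini_request` is a hypothetical helper, not an n8n function.

```python
import json

# Assumed values: check the endpoint and model name against Google's API docs.
GEMINI_BASE_URL = "https://generativelanguage.googleapis.com/v1beta"


def build_gemini_request(api_key: str, prompt: str, model: str = "gemini-1.5-flash") -> dict:
    """Describe an HTTP request that asks a Gemini model to respond to a prompt."""
    return {
        "method": "POST",
        "url": f"{GEMINI_BASE_URL}/models/{model}:generateContent",
        "headers": {
            "Content-Type": "application/json",
            "x-goog-api-key": api_key,
        },
        # The request body wraps the prompt text in a single content part.
        "body": json.dumps({"contents": [{"parts": [{"text": prompt}]}]}),
    }
```

The method, URL, headers, and body returned here correspond to the fields you would fill in when configuring an HTTP request by hand.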

Telegram supported actions

Get

Get up-to-date information about a chat

Get Administrators

Get the administrators of a chat

Get Member

Get a member of a chat

Leave

Leave a group, supergroup or channel

Set Description

Set the description of a chat

Set Title

Set the title of a chat

Answer Query

Send an answer to a callback query sent from an inline keyboard

Answer Inline Query

Send an answer to an inline query sent from an inline bot

Get

Get a file

Delete Chat Message

Delete a chat message

Edit Message Text

Edit a text message

Pin Chat Message

Pin a chat message

Send Animation

Send an animated file

Send Audio

Send an audio file

Send Chat Action

Send a chat action

Send Document

Send a document

Send Location

Send a location

Send Media Group

Send a group of photos or videos as an album

Send Message

Send a text message

Send and Wait for Response

Send a message and wait for response

Send Photo

Send a photo

Send Sticker

Send a sticker

Send Video

Send a video

Unpin Chat Message

Unpin a chat message
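For readers curious about what these actions look like at the API level, here is a rough sketch that maps a few of the action names above to Telegram Bot API methods and builds the matching request. The method names are real Bot API methods, but the mapping is an assumption about how each action is implemented, and `ACTION_TO_METHOD` and `build_telegram_request` are hypothetical names used only for illustration.

```python
# Assumed mapping from action names on this page to Telegram Bot API methods.
ACTION_TO_METHOD = {
    "Get Administrators": "getChatAdministrators",
    "Answer Query": "answerCallbackQuery",
    "Delete Chat Message": "deleteMessage",
    "Pin Chat Message": "pinChatMessage",
    "Send Message": "sendMessage",
    "Send Photo": "sendPhoto",
}

TELEGRAM_API = "https://api.telegram.org"


def build_telegram_request(token: str, action: str, params: dict) -> dict:
    """Describe the HTTP request for one action; raises KeyError for unmapped actions."""
    method = ACTION_TO_METHOD[action]
    return {
        "method": "POST",
        # Bot API calls use the form https://api.telegram.org/bot<token>/<method>.
        "url": f"{TELEGRAM_API}/bot{token}/{method}",
        "json": params,
    }


# Example: a text reply to chat 42, using a placeholder token.
request = build_telegram_request(
    "TOKEN", "Send Message", {"chat_id": 42, "text": "Hello from the workflow"}
)
```

In n8n itself the node handles credentials and request construction, so a sketch like this is mainly useful for understanding which parameters an action needs.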

Google Gemini Chat Model and Telegram integration details

Google Gemini Chat Model

Google Gemini Chat Model node docs + examples

Google Gemini Chat Model credential docs

See Google Gemini Chat Model integrations


Google Gemini Chat Model and Telegram integration tutorials

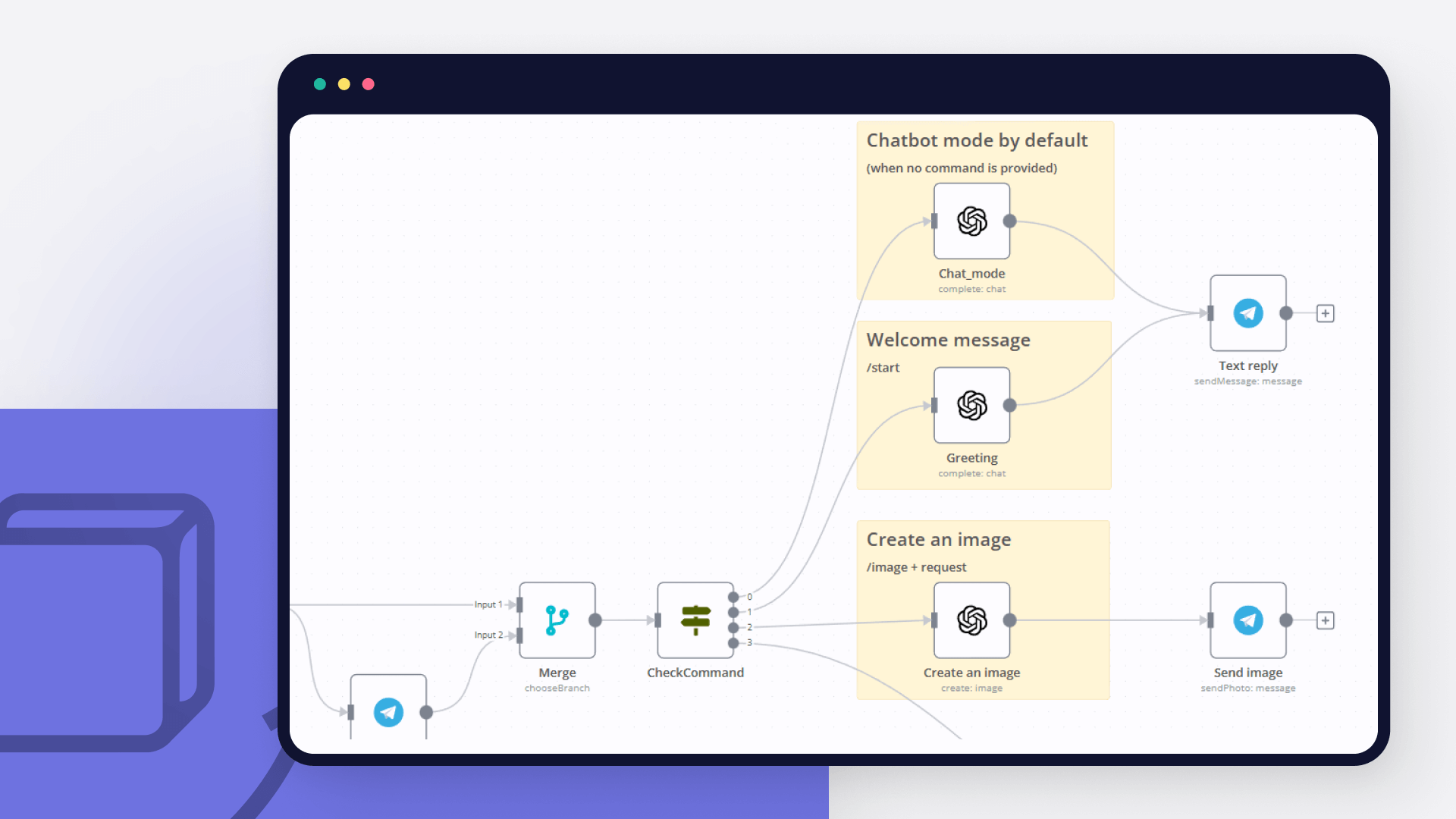

How to create an AI bot in Telegram

Learn how to create an AI chatbot for Telegram with our easy-to-follow guide. Ideal for users who are interested in exploring the realm of bot development without coding.

How to build a multilingual Telegram bot with low code

Learn how to build a multilingual bot for Telegram with a few lines of JavaScript code, a NocoDB database, and conditional logic in an automated workflow.

Create a toxic language detector for Telegram in 4 steps

Leverage the power of automation and machine learning to enable kinder online discussions.

6 e-commerce workflows to power up your Shopify store

Want to power up your online business and win back time? Discover how no-code workflow automation can help!

How to automate your reading habit just in time for World Poetry Day

Learn how to create a no-code workflow that gets international poems, translates them into one language, and sends you a poem in Telegram every day.

Using automation to boost productivity in the workplace

Instead of using IFTTT or Zapier, which can be pretty limiting on a free tier, I decided to try n8n, which is a fair-code licensed tool.

FAQ

Can Google Gemini Chat Model connect with Telegram?

Can I use Google Gemini Chat Model’s API with n8n?

Can I use Telegram’s API with n8n?

Is n8n secure for integrating Google Gemini Chat Model and Telegram?

How to get started with Google Gemini Chat Model and Telegram integration in n8n.io?

Need help setting up your Google Gemini Chat Model and Telegram integration?

Discover our community's latest recommendations and join the discussions about Google Gemini Chat Model and Telegram integration.

Looking to integrate Google Gemini Chat Model and Telegram in your company?

The world's most popular workflow automation platform for technical teams

Why use n8n to integrate Google Gemini Chat Model with Telegram

Build complex workflows, really fast